We’ve all heard about data volumes becoming too large to handle effectively.

In fact, according to a recent report, the big data and analytics market is likely to grow at a CAGR of almost 15% between 2023 and 2028, and it could be worth well over USD 600 billion by 2028. But growing data volume is only one of the problems. The other is the lack of robust data quality management. Data-driven organizations leave no stone unturned to ensure that all their business decisions are backed by healthy data.

What Is Data Quality Management?

Data quality management (DQM) is a set of strategies, methodologies, and practices that provides organizations with trusted data that’s fit for decision-making and other BI and analytics initiatives. It’s a comprehensive and continuous process of improving and maintaining company-wide data quality. Effective DQM is essential for consistent and accurate data analysis, ensuring actionable insights from your information.

In short, data quality management is all about establishing a framework based on strategies that align an organization’s data quality efforts with its overall goals and objectives.

Contrary to popular belief, data quality management is not limited to identifying and correcting errors in data sets. So it’s equally important to know what data quality management is not:

- It’s not only about data correction; correction is just one part of data quality management

- Data quality management is not a one-time fix—it’s an ongoing process, much like data integration is

- It’s not a single-department game—it’s the responsibility of every department that works with data

- It’s not limited to technology and tools—people and processes are key elements of the data quality management framework

- Data quality management is never a one-size-fits-all approach—it should be tailored to achieve business goals

Why Is Data Quality Management Important for Businesses?

It’s like asking why a solid foundation matters when constructing a skyscraper. Just as a skyscraper’s stability and longevity depend on the quality of the material used to build and strengthen its base, an organization’s success depends on the quality of the data used to make strategic decisions.

So, it’s safe to conclude that decisions are only as reliable and accurate as the data they are based on. And when businesses rely heavily on data to formulate their strategies, allocate resources, understand their target audiences, or even innovate, they must ensure that they only use healthy data. This is precisely what data quality management helps organizations with: it helps ensure that all their data-driven initiatives are backed by high-quality data.

Some other reasons why data quality management is important include:

- Data quality management and governance help organizations comply with industry and regulatory requirements.

- Done right, it reduces the costs of errors and inconsistencies in data, along with the revenue loss they can cause.

- Effective data quality management means teams spend less time improving data quality and more time innovating.

The Data Quality Management Lifecycle

Identifying Data Quality Gaps

Organizations must first evaluate their current data landscape. Common assessment methods include data audits, quality scorecards, and benchmarking against industry standards. Without a clear understanding of existing issues, improvement efforts risk being ineffective.
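A quality scorecard can start small. The sketch below, in plain Python, computes per-field completeness and a duplicate rate for a list of records. The field names, the sample records, and the choice of `email` as the duplicate key are illustrative assumptions, not part of any specific tool.

```python
from collections import Counter

def completeness(records, fields):
    """Return the share of non-empty values per field (0.0 to 1.0)."""
    total = len(records)
    scores = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        scores[field] = filled / total if total else 0.0
    return scores

def duplicate_rate(records, key):
    """Return the share of records whose key value repeats another record's."""
    counts = Counter(r.get(key) for r in records if r.get(key) not in (None, ""))
    extras = sum(n - 1 for n in counts.values())
    return extras / len(records) if records else 0.0

# Hypothetical sample data, for illustration only.
customers = [
    {"id": 1, "email": "a@example.com", "city": "Austin"},
    {"id": 2, "email": "b@example.com", "city": ""},
    {"id": 3, "email": "a@example.com", "city": "Boston"},
    {"id": 4, "email": None, "city": "Denver"},
]

scorecard = completeness(customers, ["email", "city"])
scorecard["duplicate_email_rate"] = duplicate_rate(customers, "email")
print(scorecard)
```

Tracking the same few numbers on every audit makes it easier to tell whether later improvement work is actually moving them.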

Applying the Right Tools and Processes

Once gaps are identified, organizations must implement the right combination of tools, governance policies, and automation to improve overall data quality management. This includes setting up validation rules, establishing data stewardship roles, and integrating data quality solutions within existing data pipelines.

Monitoring and Continuous Improvement

Data quality isn’t a one-time fix; it requires ongoing monitoring. Automated alerts and dashboards help detect anomalies in real time, allowing businesses to intervene before data issues spiral out of control.
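As a rough illustration of automated alerting, the sketch below compares the latest quality metrics against minimum thresholds and returns a message for each breach. The metric names, threshold values, and the `check_thresholds` helper are hypothetical; a real setup would typically send these messages to a dashboard or notification channel rather than print them.

```python
def check_thresholds(metrics, thresholds):
    """Return alert messages for metrics that are missing or below their minimum."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing")
        elif value < minimum:
            alerts.append(f"{name}: {value:.2%} is below the {minimum:.2%} minimum")
    return alerts

# Hypothetical thresholds and latest measured values.
thresholds = {"completeness": 0.98, "validity": 0.95}
latest = {"completeness": 0.991, "validity": 0.92}

for alert in check_thresholds(latest, thresholds):
    print("ALERT", alert)
```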

Integration with Data Governance

Data quality management and data governance are inherently linked. While DQM ensures data accuracy and consistency, governance enforces compliance and security. Without strong governance, data quality efforts become fragmented and ineffective. Businesses must embed DQM within governance frameworks to maintain long-term data integrity and ensure compliance with regulatory requirements.

The Blueprint for Effective Data Quality Management

Effectiveness and sustainability are key when it comes to implementing a comprehensive data quality management strategy. As a first step, it calls for assessing the current state of the organization and its data quality needs. It includes identifying data quality issues and their impact on decision-making and overall operational efficiency. Once there’s clarity on the current state of affairs, an organization will typically make the following journey:

Set Clear Objectives

These objectives serve as strategic guideposts that align the organization’s efforts with its broader business goals. The objectives must be specific and measurable to ensure that all data quality efforts are purposeful, for example, reducing data inaccuracies by a certain percentage.

Set up a Competent Team

When the objectives are defined, the next step to implement data quality management is to establish a cross-functional team of IT professionals, data stewards, and other domain experts. This specialist team outlines processes that will enable the organization to meet its objectives in a timely manner. It also collaborates and defines organizational data quality standards and guidelines that dictate how the teams should handle data within the organization to ensure data reliability and accuracy.

Define Key Metrics

The data quality management team will also identify and define key metrics to measure progress. Data quality metrics not only provide insights into the current state of data quality but also act as a compass to navigate toward predefined objectives. This way, the team can pinpoint areas that require attention and promptly make informed adjustments to its strategies.

Leverage Data Quality Tools

Investing in modern data quality tools will simplify and automate multiple aspects of data quality management. For example, these tools enable users to process large data sets easily instead of manually cleaning and validating data sets. Data quality tools also offer a centralized platform to monitor data quality metrics and track progress, amplifying the organization’s ability to manage data quality proactively. This is why these tools form an integral part of the overall data quality management strategy.

Foster Data Quality Culture

To ensure that data quality management does not remain restricted to a single department, the organization must decide how it plans to foster a culture of data quality across the board. It should include training programs, workshops, and communication initiatives as part of its overall data quality management strategy. Recognizing and rewarding individuals and teams for their contributions to data quality can also play a pivotal role in nurturing a culture that values accurate data as a strategic asset.

Core Components of Strategic Data Quality Management

The goal with strategic data quality management should be to strike a balance between data consistency and flexibility, while accommodating acceptable variations that normally exist in real-world data. Data quality guidelines specify the formats, the use of standardized codes, and the naming conventions for different data fields. They could also indicate a range of acceptable variation in data. For example, it’s pretty common for addresses to have multiple variations like “Street” and “St.” or “Road” and “Rd”, and so on.
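One way to accommodate such variations is to accept them on input and map them to a single standard form. The sketch below does this for the trailing street-type word only. The `SUFFIXES` mapping and the `normalize_address` helper are illustrative assumptions, and a production guideline would cover far more variants.

```python
# Hypothetical mapping of accepted variants to one standard form.
SUFFIXES = {"st": "Street", "street": "Street", "rd": "Road", "road": "Road"}

def normalize_address(address):
    """Standardize the trailing street-type word, leaving the rest untouched."""
    words = address.strip().split()
    if not words:
        return ""
    last = words[-1].rstrip(".").lower()
    if last in SUFFIXES:
        words[-1] = SUFFIXES[last]
    return " ".join(words)

for raw in ["12 Main St.", "45 Oak Rd", "St. James Rd."]:
    print(raw, "->", normalize_address(raw))
```

Only the last word is rewritten, so a leading “St.” that stands for “Saint” is left alone. This is a deliberate trade-off between consistency and flexibility.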

There are several core components that work together to ensure data accuracy. These include:

- Data Profiling: Data profiling is all about understanding business data thoroughly by analyzing its structure, content, and relationships. It’s a systematic process that enables organizations to gain a holistic view of their data’s health by identifying anomalies and inconsistencies that exist therein. Inconsistencies normally include missing values, duplicate records, discrepancies in formatting, outliers, etc. Identifying these data quality issues proactively goes a long way in preventing costly mistakes and potential revenue loss due to decisions based on inaccurate data.

- Data Cleansing: Data cleansing in data quality management is a series of procedures aimed at improving the overall data quality by identifying and eliminating errors and inaccuracies therein. While data profiling only provides information about the health of data without actually altering it, data cleansing involves removing duplicate records, addressing missing values, and rectifying inaccurate data points.

- Data Enrichment: As part of data quality management, data enrichment further enhances the organization’s understanding of its data by providing additional context. It involves adding relevant supplementary information from trusted external sources, which augments the overall value of the data set. Adding more data also improves its completeness. For example, appending demographic information to customer records can be a way to complete the data set and provide a holistic view of customer data.

- Data Validation: The data quality management team defines specific rules and standards that data must conform to before it can be considered valid. For example, if collecting ages, a data validation rule might stipulate that ages must be between 0 and 200. Similarly, the validation rule might require a specific number of digits or a particular pattern for phone numbers to be valid. This way, businesses can ensure that their data meets the defined quality standards before using it for BI and analytics.

- Monitoring and Reporting: Setting up processes to maintain data quality on its own is never sufficient. A sustainable approach needs continuous monitoring and reporting, which requires setting up KPIs and specific metrics. These metrics could include data accuracy rates, data completeness percentages, or the number of missing fields. This way, businesses can identify and proactively address emerging issues before they turn into a bigger problem.
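The validation rules described in the list above can be sketched as a small function. The age range of 0 to 200 comes from the example in the text. The phone rule (exactly ten digits once separators are stripped) and the record layout are assumptions made for illustration.

```python
import re

# Hypothetical rule: exactly ten digits once separators are removed.
PHONE_DIGITS = re.compile(r"\d{10}")

def validate_record(record):
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = []
    age = record.get("age")
    if not isinstance(age, int) or isinstance(age, bool) or not 0 <= age <= 200:
        errors.append("age must be an integer between 0 and 200")
    # Strip spaces, parentheses, dots, plus signs, and hyphens before checking.
    digits = re.sub(r"[\s().+-]", "", str(record.get("phone", "")))
    if not PHONE_DIGITS.fullmatch(digits):
        errors.append("phone must contain exactly 10 digits")
    return errors

print(validate_record({"age": 34, "phone": "(555) 123-4567"}))
print(validate_record({"age": 250, "phone": "12345"}))
```

Returning every violation, rather than stopping at the first, lets the same function feed monitoring metrics such as the number of invalid fields.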

Data Quality Management and Data Governance

Speaking of data quality management, there’s another component of data management that ensures data remains secure and accurate—data governance.

While these concepts are closely related, particularly when it comes to data quality, they serve different purposes. Data governance is a set of policies, standards, and processes to manage and control data across the organization. It involves strategic and organizational aspects of data management, which means that improving data quality is not the main focus. The focus is on managing data effectively and efficiently to achieve organizational goals. However, effective data management in itself requires accurate and reliable data, and this is where data quality management comes in.

Compared to data governance, data quality management focuses explicitly on implementing processes that directly improve and maintain organizational data quality. It involves several activities, such as data profiling and cleansing, among others, that help preserve data quality. For example, data governance defines that healthcare data has to be accurate to support decision-making, and data quality management will implement this policy using data quality tools and other processes.

Suffice it to say that both these concepts are essential and complement each other in building an effective data management framework. Regardless of how well-defined data governance policies are, they’ll only look good on paper if data quality management is lacking. Similarly, all it takes to undermine the efforts put into data quality management is inconsistent policies stemming from poor data governance.

The Role of AI and Automation in Modern Data Quality Management

Data quality management (DQM) has always been crucial for informed decision-making and effective business operations. However, the sheer volume and complexity of data in the age of AI have made traditional DQM approaches inadequate. This is primarily due to:

- Hidden data inconsistencies and inaccuracies that go undetected, impacting reporting, analytics, and ultimately, business decisions

- Slow remediation as identifying and fixing data quality problems manually is a lengthy process that affects business agility

- The use of manual and rule-based approaches that struggle to scale and end up creating bottlenecks and increasing the risk of data quality degradation

Today, organizations leverage AI-driven automation to improve data quality management and make the entire process more efficient, proactive, and scalable by:

- Using AI-powered tools to automatically analyze data and identify anomalies and quality issues

- Training machine learning algorithms to identify and correct data errors, inconsistencies, and duplicates with greater accuracy and speed

- Integrating automated data quality systems that continuously monitor data quality in real time and alert stakeholders to any deviations from established standards
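To make automated anomaly detection concrete, here is a deliberately simple statistical stand-in rather than a trained machine learning model. It flags values whose modified z-score, based on the median and the median absolute deviation, exceeds a threshold. The 0.6745 constant and the 3.5 cutoff follow a common convention for this score. The `flag_outliers` name and the sample figures are hypothetical.

```python
from statistics import median

def flag_outliers(values, threshold=3.5):
    """Return indexes of values whose modified z-score exceeds the threshold."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # No spread to measure against, so nothing is flagged.
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - center) / mad > threshold
    ]

# Hypothetical daily order counts with one suspicious spike.
daily_order_counts = [102, 98, 105, 101, 99, 100, 970, 103]
print(flag_outliers(daily_order_counts))
```

Using the median instead of the mean keeps a single extreme value from masking itself, which is one reason this kind of check is a reasonable baseline before adopting heavier AI tooling.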

The key is to identify specific pain points and leverage AI-powered tools that address those challenges effectively. Organizations must lean toward solutions that offer tangible improvements in areas like data discovery, cleansing, monitoring, governance, and enrichment.

Data Quality Management Is an Ongoing Process

Unlike one-time efforts such as data migration, data quality management is a continuous process that must adapt to changes in the organization, its data, and the technology landscape. And rightly so, given the fast-paced advancements in technology and the ever-increasing reliance on data.

Changing Business Requirements

Organizations evolve over time. From introducing new products and services to implementing leaner, more refined processes, their requirements continue to change. And in this dynamic business landscape, it’s impossible for organizational data to remain static. Activities such as expanding into new markets and mergers and acquisitions lead to changes in data formats and its usage patterns, which can consequently impact data quality management.

Technological Advancements

New tools and technologies are always on the horizon in today’s tech-driven world, waiting to change the way data is collected, stored, and consumed. The adoption of these tools and technologies means an impact on data and its quality. It is, therefore, paramount for those responsible for data quality management to safeguard data integrity as these technologies are integrated into business processes. This would entail adapting data quality management strategies and inculcating a culture of continuous learning to stay up to date with these advancements.

Legacy System Upgrades

Legacy system modernization usually involves migrating data, a whole lot of it, from age-old on-premises systems to the cloud. While it appears to be a one-off process, this data movement will require transforming and validating considerable amounts of data to meet the requirements of the new destination. On top of that, it also includes monitoring data flows to identify and rectify discrepancies as they arise.

To achieve all this and ensure that only healthy data makes its way to the new cloud-based repository, the organization will need reliable data quality management at every step of the process.
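One common check during such a migration is to reconcile the source and target after each load. The sketch below compares rows by key and by a hash of selected fields. The `reconcile` and `row_fingerprint` helpers, the field names, and the sample rows are all hypothetical, and a real migration would read from the actual systems instead of in-memory lists.

```python
import hashlib

def row_fingerprint(row, fields):
    """Hash the selected fields of a row in a fixed order."""
    joined = "|".join(str(row.get(f, "")) for f in fields)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()

def reconcile(source_rows, target_rows, key, fields):
    """Compare source and target by key and report missing or changed rows."""
    source = {r[key]: row_fingerprint(r, fields) for r in source_rows}
    target = {r[key]: row_fingerprint(r, fields) for r in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }

# Hypothetical rows from the legacy system and the new cloud repository.
legacy = [
    {"id": 1, "name": "Ann", "balance": "10.50"},
    {"id": 2, "name": "Bob", "balance": "7.00"},
    {"id": 3, "name": "Cy", "balance": "0.00"},
]
cloud = [
    {"id": 1, "name": "Ann", "balance": "10.50"},
    {"id": 2, "name": "Bob", "balance": "7.10"},
]

report = reconcile(legacy, cloud, "id", ["name", "balance"])
print(report)
```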

Data Volume

And then, we have the case of a never-ending increase in data volume. With big data and IoT in the picture, it can be hard to imagine the scale and speed at which data moves. At that pace, even a brief lapse in data quality management can let flawed data flow into decision-making and other BI and analytics initiatives.

Data Quality Management Best Practices

When it comes to data management, and data quality management in particular, there is a set of established guidelines and best practices which represent the culmination of experience, research, and industry knowledge vital for achieving optimal data quality standards. While the list can be long, here are some data quality management best practices:

- Cross-Functional Collaboration: Data quality management without intracompany collaboration is like a ship navigating treacherous waters with an uncoordinated crew. Data quality management is not the sole responsibility of a single department; it’s a collective effort. Simply put, data-related issues will go unchecked without teamwork among different departments. Conversely, these issues can be identified and addressed early on if key stakeholders work together.

- Data Ownership: One of the ways to foster a culture of data ownership is to assign responsibilities for specific data sets. A team designated as a data custodian will feel empowered and motivated to ensure data accuracy throughout its lifecycle. Additionally, data owners can collaborate with other stakeholders to enforce data quality management policies, promoting responsibility and transparency across the organization.

- Data Documentation: Maintaining comprehensive documentation about the data sources, transformations, and quality rules is fundamental to data quality management. This documentation enables organizations to establish a clear lineage that traces the origins of data, helping them understand the data’s journey from its creation to its current state. It also enables them to get insights into how raw data was modified or processed.

- Train Data Users: Providing regular training to employees about the importance of data quality management and their role in maintaining accurate data should be one of the top priorities. Users who are well aware of and understand data quality guidelines will be able to collect, process, and analyze data by applying the best practices. Not only that, when individuals across departments grasp the impact of their data-related actions on others, they are more likely to communicate and collaborate to maintain data accuracy.

- Iterative Improvement: Treating data quality management as iterative acknowledges that it’s not a one-time effort but an ongoing journey. Organizations that continue to improve their data quality management efforts per business requirements remain adaptable in the face of evolving data challenges. A commitment to continuous improvement ensures that data quality management strategies stay aligned with the changing landscape as data sources expand and newer technologies emerge.

Simplify Data Quality Management With Astera

Astera is an end-to-end data management solution powered by automation and artificial intelligence (AI). Astera offers built-in features that simplify data quality management for all types of users, regardless of their technical knowledge or expertise. From data profiling to validating data to setting data quality rules, everything is a matter of drag-and-drop and point-and-click.

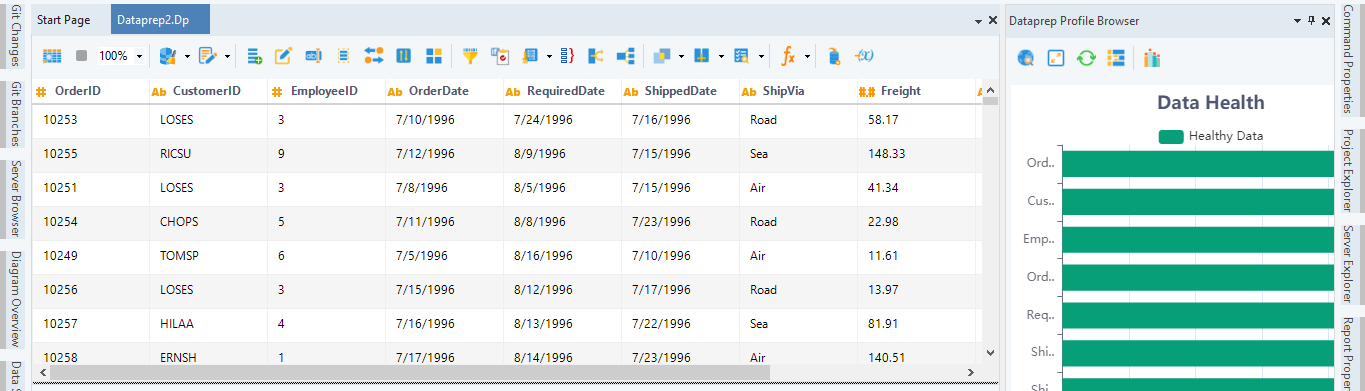

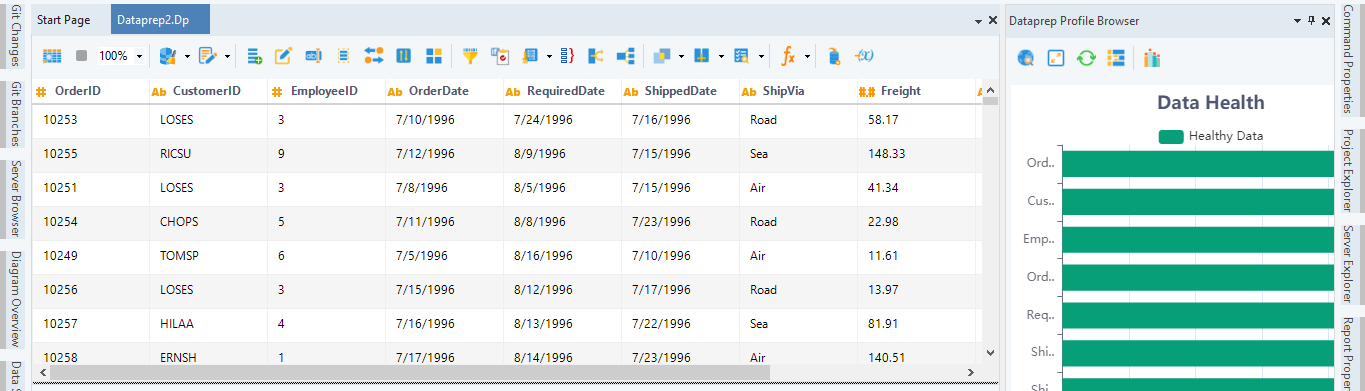

Data Quality Management – Data health displayed in Astera’s UI

But that’s not all. Astera also features real-time health checks. These are interactive visuals that provide a profile of the entire data set, as well as individual columns, so users can directly identify data quality issues like the number of missing fields, duplicate records, etc.

Ready to take the first step towards healthy data? Contact us or get in touch with one of our data solutions experts at +188877ASTERA.

Authors:

Khurram Haider

March 27th, 2025
