A Deep Dive into Data Reliability & What It Means for You

It’s no secret that data is an invaluable asset. It powers analytical insights, provides a better understanding of customer preferences, shapes marketing strategies, informs product and service decisions…the list goes on. The importance of reliable data cannot be overemphasized, and data reliability is a crucial aspect of data integration architecture that cannot be overlooked. It involves ensuring that the integrated data is accurate, consistent, up to date, and delivered in the correct order.

Failure to guarantee data reliability can result in inaccurate reporting, lost productivity, and lost revenue. Therefore, businesses must implement measures to verify the reliability of integrated data, such as conducting data validation and quality checks, to ensure its trustworthiness and effective usability for decision-making.
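As a minimal sketch of one such check, the Python below verifies two of the properties named earlier, correct order and freshness, for a batch of integrated records. It assumes each record carries a hypothetical per-source sequence number (`seq`) and a last-updated timestamp (`updated_at`). Real pipelines may track ordering and freshness differently, so treat the field names and the one-hour threshold as illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Record:
    seq: int              # hypothetical per-source sequence number
    updated_at: datetime  # when the source last changed this record


def check_order_and_freshness(records, max_age, now):
    """Return human-readable problems found in one integrated batch."""
    problems = []
    # Ordering: sequence numbers should increase by exactly 1
    for prev, cur in zip(records, records[1:]):
        if cur.seq <= prev.seq:
            problems.append(f"out of order or duplicate: {cur.seq} after {prev.seq}")
        elif cur.seq != prev.seq + 1:
            problems.append(f"gap: expected {prev.seq + 1}, got {cur.seq}")
    # Freshness: flag records older than the allowed age
    for r in records:
        if now - r.updated_at > max_age:
            problems.append(f"stale: record {r.seq} last updated {r.updated_at.isoformat()}")
    return problems


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
batch = [
    Record(1, now - timedelta(minutes=5)),
    Record(2, now - timedelta(minutes=3)),
    Record(4, now - timedelta(days=2)),  # arrives before record 3 and is stale
    Record(3, now),
]
problems = check_order_and_freshness(batch, max_age=timedelta(hours=1), now=now)
for p in problems:
    print(p)
```

In practice a check like this would run after each load, with problems routed to an alert or a quarantine table rather than printed.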

This article will help you understand how to test data for reliability and how data cleansing tools can improve it. We’ll also discuss the differences between data reliability and data validity, so you know what to look out for when dealing with large volumes of information. So, let’s get started and dive deeper into the world of data reliability!

What Is Data Reliability?

Data reliability helps you understand how dependable your data is over time, which is especially important when analyzing trends or making predictions based on past data points. It is not just about the accuracy of the data itself but also about consistency: applying the same set of rules to all records, regardless of their age or format.

If your business relies on data to make decisions, you need to have confidence that the data is trustworthy and up to date. That’s where data reliability comes in. It is all about determining the accuracy, consistency, and quality of your data.

Making sure that the data is valid and consistent is important to ensure data reliability. Data validity refers to the degree of accuracy and relevance of data for its intended purpose, while data consistency refers to the degree of uniformity and coherence of data across various sources, formats, and time periods.
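Consistency across sources can be checked by comparing the same field for the same entity in each system. The sketch below assumes two hypothetical sources, a CRM and a billing system, each reduced to a mapping from customer ID to email address. It treats differences in letter case or surrounding whitespace as formatting rather than genuine conflicts, and reports customers that exist in only one source.

```python
def normalize_email(value):
    """Treat case and surrounding whitespace as formatting, not content."""
    return value.strip().lower() if value else None


def find_inconsistencies(crm, billing):
    """Return {customer_id: (crm_value, billing_value)} for every mismatch.

    A customer present in only one source is reported with None on the other side.
    """
    issues = {}
    for cust_id in crm.keys() | billing.keys():
        a = normalize_email(crm.get(cust_id))
        b = normalize_email(billing.get(cust_id))
        if a != b:
            issues[cust_id] = (a, b)
    return issues


crm = {101: "Ana@Example.com", 102: "bo@example.com", 103: "cy@example.com"}
billing = {101: " ana@example.com", 102: "bob@example.com", 104: "di@example.com"}

issues = find_inconsistencies(crm, billing)
for cust_id in sorted(issues):
    print(cust_id, issues[cust_id])
```

Customer 101 is not reported because the two values differ only in formatting; deciding which differences count as formatting is a business rule you would define for your own data.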

What Determines Data Reliability?

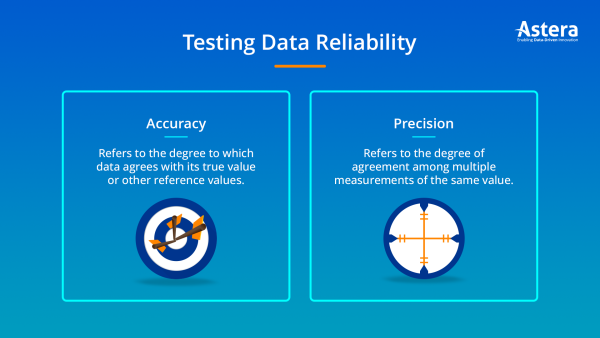

Accuracy and Precision

The reliability of data depends greatly on its accuracy and precision. Accurate data corresponds closely to the actual value of the metric being measured. Precise data shows little variation when the same quantity is measured repeatedly, even if those measurements are all off from the actual value.

Data can be precise but not accurate, accurate but not precise, neither, or both. The most reliable data is both highly accurate and precise.

Collection Methodology

The techniques and tools used to gather data significantly impact its reliability. Data collected through a rigorous scientific method with controlled conditions will likely be more dependable than that gathered through casual observation or self-reporting. Using high-quality, properly calibrated measurement instruments and standardized collection procedures also promotes reliability.

Sample Size

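The number of data points collected, known as the sample size, has a strong effect on reliability. Larger samples reduce the margin of error and make statistically significant results easier to detect. They also make it more likely that the data accurately represents the total population, and they reduce the effect of outliers. The improvement is not proportional, though: the margin of error of an estimated mean shrinks with the square root of the sample size, so quadrupling the sample only halves it. A sample size of at least 30 data points is a common rule of thumb for basic statistical estimates, but the size you actually need depends on how variable the data is and how precise your results must be.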
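How sample size affects reliability can be seen from the standard margin-of-error formula for a sample mean, MoE = z · s / √n, where s is the standard deviation and n the sample size. The sketch below assumes a roughly normal sampling distribution, z = 1.96 (about 95% confidence), and an illustrative standard deviation of 10. It shows the margin shrinking with √n rather than n, so each fourfold increase in sample size halves it.

```python
import math


def margin_of_error(std_dev, n, z=1.96):
    """Approximate margin of error for a sample mean.

    Assumes the sampling distribution of the mean is roughly normal;
    z = 1.96 corresponds to about 95% confidence.
    """
    return z * std_dev / math.sqrt(n)


for n in (30, 120, 480):
    print(n, round(margin_of_error(std_dev=10.0, n=n), 2))
# 30 3.58
# 120 1.79
# 480 0.89
```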

Data Integrity

Reliable data has a high level of integrity, meaning it is complete, consistent, and error-free. Missing, duplicated, or incorrect data points reduce reliability. Performing quality assurance checks, validation, cleaning, and deduplication helps ensure data integrity. Using electronic data capture with built-in error checking and validation rules also promotes integrity during collection.
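The integrity checks described above can be automated. The sketch below, in plain Python, counts missing required fields, finds duplicate IDs, and applies two validation rules. The schema (`id`, `email`, `age`) and the rules themselves (an email must contain "@", an age must fall between 0 and 120) are illustrative assumptions, not a standard.

```python
from collections import Counter

REQUIRED = ("id", "email", "age")  # hypothetical schema


def integrity_report(rows):
    """Summarize missing required fields, duplicate IDs, and rule violations."""
    # Completeness: count every required field that is absent or blank
    missing = sum(1 for r in rows for f in REQUIRED if r.get(f) in (None, ""))
    # Uniqueness: an ID appearing more than once is a duplicate
    id_counts = Counter(r.get("id") for r in rows)
    duplicate_ids = sorted(i for i, c in id_counts.items() if c > 1 and i is not None)
    # Validity: illustrative rules, email must contain "@", age must be 0-120
    invalid_ids = [
        r.get("id")
        for r in rows
        if (r.get("email") and "@" not in r["email"])
        or (isinstance(r.get("age"), int) and not 0 <= r["age"] <= 120)
    ]
    return {"missing": missing, "duplicate_ids": duplicate_ids, "invalid_ids": invalid_ids}


rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 41},
    {"id": 2, "email": "b@example.com", "age": 41},
    {"id": 3, "email": "c.example.com", "age": 150},
]
print(integrity_report(rows))
# {'missing': 1, 'duplicate_ids': [2], 'invalid_ids': [3]}
```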

Objectivity

The degree of objectivity and lack of bias with which the data is collected and analyzed impacts its reliability. Subjective judgments, opinions, and preconceptions threaten objectivity and should be avoided. Reliable data is gathered and interpreted in a strictly impartial, fact-based manner.

In summary, the most dependable data is accurate, precise, collected scientifically with high integrity, has a large sample size, and is analyzed objectively without bias. By understanding what determines reliability, you can evaluate the trustworthiness of data and make well-informed decisions based on facts.

Testing Data Reliability

Data reliability is an essential concept to consider when dealing with a large data set, because the data you work with must be trustworthy and lead to meaningful results. To test whether your data is reliable, check two primary properties: accuracy and precision.

- Accuracy: Refers to the degree to which data agrees with its true value or other reference values. For example, if an object’s true length is 10 cm and five measurements read 9 cm, 10 cm, 10 cm, 11 cm, and 13 cm, their average (10.6 cm) is close to the true value, so the set is reasonably accurate on average even though the individual readings are widely scattered.

- Precision: Refers to the degree of agreement among multiple measurements of the same value. For example, if five measurements of the same object all read 12 cm, they agree perfectly and are highly precise. If the true length is 10 cm, however, every reading is 2 cm off, so the readings are precise but not accurate.
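The two properties can be quantified separately: bias (the mean reading minus the true value) for accuracy, and the sample standard deviation for precision. The sketch below uses Python’s standard `statistics` module with a true length of 10 cm and the readings of 9, 10, 10, 11, and 13 cm mentioned above, plus a hypothetical set of readings that all read 12 cm.

```python
import statistics


def accuracy_and_precision(readings, true_value):
    """Return (bias, spread) for repeated measurements of one quantity.

    bias   = mean reading - true value (closer to 0 means more accurate)
    spread = sample standard deviation (smaller means more precise)
    """
    bias = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return bias, spread


true_length_cm = 10
scattered = [9, 10, 10, 11, 13]    # readings from the example above
consistent = [12, 12, 12, 12, 12]  # hypothetical: precise but not accurate

print(accuracy_and_precision(scattered, true_length_cm))   # bias about 0.6, spread about 1.52
print(accuracy_and_precision(consistent, true_length_cm))  # (2, 0.0)
```

Reporting the two numbers separately makes it clear which problem you have: a large bias points to a systematic error such as a miscalibrated instrument, while a large spread points to noisy collection.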

Linking Data Reliability and Validity

When you’re dealing with data, it’s important to understand the relationship between data reliability and data validity. Reliable data is accurate and consistent and gives you dependable results, while valid data is logical, meaningful, and accurate for its intended purpose.

Think of reliability as whether your data produces consistent, accurate results each time it is measured or processed, while validity looks at whether the data is meaningful for the question you are asking. Both are important: reliability gives you dependable results, while validity makes sure they are actually relevant.

The best way to ensure that your data is reliable and valid? Make sure you do regular maintenance on it. Data cleansing can help you achieve that!

Benefits of Reliable Data

Data reliability refers to the accuracy and precision of data. For data to be considered reliable, it must be consistent, dependable, and replicable. For data analysts, data reliability is crucial for several reasons:

Higher Quality Insights

Reliable data leads to higher quality insights and analysis. When data is inconsistent, inaccurate or irreproducible, any insights or patterns found cannot be trusted. This can lead to poor decision making and wasted resources. With reliable data, you can have confidence in the insights and feel assured that key findings are meaningful.

Data-Driven Decisions

Data-driven decisions rely on reliable data. Leaders and managers increasingly depend on data analysis and insights to guide strategic decisions. However, if the underlying data is unreliable, any decisions made can be misguided.

Data reliability is key to truly data-driven decision making. When data can be trusted, data-driven decisions tend to be more objective, accurate and impactful.

Reproducible Results

A key characteristic of reliable data is that it produces reproducible results. When data is unreliable, repeating an analysis on a fresh extract of the same data, or on newly collected data, may yield different results. This makes the data essentially useless for serious analysis.

With reliable, high-quality data, re-running an analysis or test will provide the same insights and conclusions. This is important for verification of key findings and ensuring a single analysis was not an anomaly.
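One practical way to support reproducibility is to record a fingerprint of the exact input data next to each analysis result. If a rerun produces different conclusions but the fingerprint matches, the difference lies in the analysis; if the fingerprint differs, the data changed. The sketch below assumes records are JSON-serializable dictionaries and uses SHA-256 from Python’s `hashlib`.

```python
import hashlib
import json


def dataset_fingerprint(rows):
    """Order-independent SHA-256 fingerprint of a list of dict records."""
    # Serialize each record with sorted keys so key order does not matter,
    # then sort the serialized rows so row order does not matter either.
    canonical = sorted(json.dumps(r, sort_keys=True) for r in rows)
    return hashlib.sha256("\n".join(canonical).encode("utf-8")).hexdigest()


run_1 = [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 35.5}]
run_2 = [{"amount": 35.5, "id": 2}, {"id": 1, "amount": 20.0}]  # same data, reordered
run_3 = [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 36.5}]  # one value changed

fp_1, fp_2, fp_3 = (dataset_fingerprint(r) for r in (run_1, run_2, run_3))
print(fp_1 == fp_2)  # True
print(fp_1 == fp_3)  # False
```

Ignoring row order is a design choice: it suits unordered sets of records, but if row order matters to your analysis, hash the rows in their original order instead.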

In summary, data reliability is essential for any organization that depends on data to shape key business decisions and strategies. By prioritizing data quality and reliability, data can be transformed into a true business asset that drives growth and success. With unreliable data, an organization is operating on questionable insights and gut instinct alone.

The Role of Data Cleansing in Achieving Reliable Data

Data cleansing plays a key role in ensuring data reliability. After all, if your data is polluted by errors and inaccuracies, it’s going to be difficult to trust the results you get from your analysis.

Data cleansing usually involves three main steps:

- Identifying faulty or inconsistent data – This involves looking for patterns in the data that indicate erroneous values or missing values, such as blank fields or inaccurate records.

- Correcting inconsistencies – This can involve techniques such as normalizing data and standardizing formats, as well as filling in missing information.

- Validating the correctness of the data – Once the data has been cleansed, it’s important to validate the results to ensure that they meet the accuracy levels you need for your specific use case. Automated data validation tools can streamline this step.
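The three steps can be sketched as small functions applied in sequence. Everything here is illustrative: the record fields (`name`, `phone`, `country`), the default country used to fill gaps, and the acceptance rules in the validation step (a non-empty name, a 10-digit phone number, a two-letter country code) are assumptions chosen for the example, not rules any particular tool applies.

```python
import re

NON_DIGITS = re.compile(r"\D")  # any character that is not a digit


def identify(rows):
    """Step 1: return the indexes of rows that contain a blank field."""
    return [i for i, r in enumerate(rows) if any(v in (None, "") for v in r.values())]


def correct(rows, default_country="US"):
    """Step 2: standardize formats and fill a missing country with a default."""
    cleaned = []
    for r in rows:
        c = dict(r)
        c["name"] = (c.get("name") or "").strip().title()
        c["phone"] = NON_DIGITS.sub("", c.get("phone") or "")
        c["country"] = (c.get("country") or default_country).upper()
        cleaned.append(c)
    return cleaned


def validate(rows):
    """Step 3: return the rows that still fail the acceptance rules."""
    return [
        r for r in rows
        if not (r["name"] and len(r["phone"]) == 10 and len(r["country"]) == 2)
    ]


rows = [
    {"name": "  ada LOVELACE ", "phone": "(555) 123-4567", "country": "us"},
    {"name": "alan turing", "phone": "555.987.6543", "country": ""},
    {"name": "", "phone": "12", "country": "GB"},
]
flagged = identify(rows)      # rows 1 and 2 have blank fields
cleaned = correct(rows)
rejected = validate(cleaned)  # only the third row: blank name and short phone
print(flagged, cleaned, rejected, sep="\n")
```

Keeping the steps separate means rejected rows can be sent back for manual review instead of silently dropped.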

Data reliability can be difficult to achieve without proper tools and processes. Astera Centerprise, for example, offers various data cleansing tools that can help you get the most out of your data.

Conclusion

Data reliability is not only about data cleansing; it calls for a holistic approach to data governance. Ensuring data reliability requires a conscious effort from business leaders, which makes it easier said than done. Data validity tests, redundancy checks, and data cleansing solutions are all effective starting points for achieving data reliability.

Astera Centerprise helps achieve this by offering the best data cleansing solutions to get ahead of data reliability problems. This powerful data integration and management platform ensures accurate, consistent, and reliable data through its data quality features, which help profile, cleanse, and standardize data.

Additionally, its validation capabilities ensure that data meets quality standards. Robust data governance capabilities in Astera Centerprise enable automated data quality checks and consistency across data elements. Further, the platform’s integration capabilities connect to various data sources, creating a single source of truth for data.

So, whether you’re looking to improve your data quality, manage your data assets more effectively, or streamline your data integration processes, Astera Centerprise has everything you need to succeed.

How Astera Centerprise Helps Improve Your Data Reliability

Astera Centerprise offers solutions for achieving reliable data. With code-free, self-service data cleansing tools, the platform keeps data consistent and accurate as it is input, stored, and output. Its capabilities span data integration, transformation, quality, and profiling, allowing for data cleaning, validation, standardization, and custom rule definitions.

Tools provided by Astera Centerprise to master your data reliability needs:

- Data integration: Connect different types of structured & unstructured data sources & automate the flow of your data pipelines.

- Data transformation: Cleanse & transform your source to target datasets while maintaining lineage & auditing functionalities.

- Data quality: Streamline various aspects of data profiling to enforce consistency in your datasets & identify inconsistencies or anomalies quickly.

- Data profiling: Analyze the structure, completeness, accuracy, and consistency through automated or manual processes, depending on dataset complexity.
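For readers who want to see what a basic profile involves, the sketch below computes per-column completeness, distinct-value counts, and observed value types in plain Python. It is a generic illustration, not how Astera Centerprise implements profiling, and it assumes rows are dictionaries with hashable values.

```python
def profile(rows):
    """Per-column completeness, distinct-value count, and observed value types."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "completeness": len(present) / len(rows),  # share of non-blank values
            "distinct": len(set(present)),             # assumes hashable values
            "types": sorted({type(v).__name__ for v in present}),
        }
    return report


rows = [{"id": 1, "zip": "10001"}, {"id": 2, "zip": 10002}, {"id": 3, "zip": None}]
for col, stats in profile(rows).items():
    print(col, stats)
```

Here the `zip` column is both incomplete and stored with mixed types (`int` and `str`), the kind of inconsistency a profile is meant to surface before the data moves downstream.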

Thus, Astera Centerprise gives business leaders a suite of powerful tools to ensure their source data meets quality standards, so they can better trust their results downstream. With built-in data validation and profiling, it helps scrutinize source data for quality, integrity, and structure at any stage of the ETL process, allowing customers to maintain high accuracy throughout the transformation process, all without writing any code.

Astera AI Agent Builder - First Look Coming Soon!
