Overcoming Snowflake Challenges – A Practical Guide

Have you ever felt like a snowflake in the middle of a raging snowstorm? That’s how it can feel when trying to grapple with the complexity of managing data on the cloud-native Snowflake platform. Too often, teams face Snowflake challenges ranging from data quality and data security to cost management, performance, and making sure the platform can meet future needs. Tackling these problems doesn’t have to be overwhelming, though.

In this guide, we provide practical steps for overcoming each of these challenges so that you can build a reliable and resilient Snowflake environment. We’ll cover topics such as data governance, choosing between ETL and ELT, integrating with other systems, and more. So, let’s get started!

What is Snowflake?

If you’re here, you’ve probably heard of Snowflake and maybe even wondered how it could help your organization. Snowflake is a modern cloud-based data platform that offers near-limitless scalability, storage capacity, and analytics power in an easily managed architecture. That architecture separates storage from compute: a central storage layer holds your data, independent compute clusters called virtual warehouses run queries against it, and a cloud services layer handles tasks such as authentication, metadata management, and query optimization.

This combination lets you store, query, and analyze all your structured and unstructured data, no matter where it lives, without worrying about managing server hardware or software. Snowflake also lets you set up secure data sharing with other companies or partners.

From managing data quality to ensuring data security and governance to improving performance, Snowflake provides various solutions for tackling the most common challenges associated with data management. By taking advantage of this powerful platform, organizations can focus on what matters most: collecting meaningful insights from their data.

Common Snowflake Challenges

Snowflake can present a number of challenges, but the good news is that they can be overcome. The most common Snowflake challenges are:

Poor Data Quality

Low data quality leads to incomplete or incorrect data sets, which will make it difficult for you to analyze your data and make decisions based on it. To address this issue, check the source of your data and clean out any inconsistencies and errors. Additionally, perform data validation checks to ensure that each input follows the rules you’ve set up and that all outputs are consistent.

Lack of Flexibility

Inflexible systems can prevent you from making the changes needed to keep up with a dynamic business environment. To keep your system adaptable, take an agile, incremental approach when implementing new solutions; this way you won’t have to start from scratch every time something needs to change. Automate repetitive tasks as well, so that manual processes don’t bog down your operations.

Overly Complicated Systems

Overly complicated systems can lead to inefficient processes that take too much time and effort to complete. In order to tackle this challenge, review your current systems for unnecessary complexity and look for ways you can simplify them. Additionally, focus on user-friendliness so that users don’t get overwhelmed when interacting with the system.

By addressing these common Snowflake challenges, you’ll be well equipped to overcome roadblocks and achieve a successful Snowflake implementation.

Overcoming Snowflake Challenges: Ensuring Data Quality

Data is only useful if it’s accurate and up to date. That’s why ensuring data quality is one of the critical challenges when it comes to managing data in Snowflake. Here are some useful tips to help you keep your data clean:

- Identify data sources: Know where your data is coming from, so you can make sure it’s accurate. Trustworthy sources are essential for ensuring good data quality.

- Validate data input: Put checks in place to ensure that all incoming data is valid and up to date. This way, you can rest assured that your data will be as accurate as possible.

- Monitor regularly: Regularly check for any discrepancies and errors in the datasets so that you can identify and address them promptly.

- Automate the process: Automation tools can help streamline the process of monitoring and validating data, making it easier for you to ensure high quality at scale.
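As a minimal sketch of the "validate data input" step, the Python below checks incoming records against a few rules before they are loaded and separates clean records from rejected ones. The field names (order_id, email, amount) and the rules themselves are hypothetical; in practice you would encode your own rules, and many teams run equivalent checks in their pipeline tool or as SQL inside Snowflake.

```python
import re
from typing import Any

# Hypothetical rules: each maps a field name to a check that returns True
# when the value is acceptable.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

RULES = {
    "order_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and EMAIL_RE.match(v) is not None,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the rule violations for one record (an empty list means valid)."""
    errors = []
    for field, check in RULES.items():
        value = record.get(field)
        if value is None:
            errors.append(f"{field}: missing")
        elif not check(value):
            errors.append(f"{field}: invalid value {value!r}")
    return errors


def split_valid(records: list[dict[str, Any]]):
    """Separate clean records from rejected ones so only clean data is loaded."""
    valid, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


# Hypothetical sample input.
records = [
    {"order_id": 1, "email": "a@example.com", "amount": 19.99},
    {"order_id": -5, "email": "not-an-email", "amount": 10},
    {"order_id": 3, "amount": 5.0},
]
valid, rejected = split_valid(records)
```

Keeping the rejected records together with their error messages, rather than silently dropping them, gives you something concrete to monitor and fix at the source.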

With these tips, you can keep your Snowflake databases clean and up to date!

Overcoming Snowflake Challenges: Securing and Governing Data

Managing data security and governance in a Snowflake environment is a challenge many organizations face. Snowflake provides strong built-in security mechanisms, including multi-factor authentication, encryption of data at rest and in transit, network policies, and role-based access control, but governing data in the cloud still takes deliberate effort.

Data governance is essential for complying with industry regulations and other external standards. It also ensures that users get the right information, only the information they are authorized to see, and that this information stays accurate.

Here are some ways to overcome these challenges:

Data Catalogs

Using data catalogs to manage your organization’s data assets can help you organize your data, define clear access rules, track usage history, and monitor changes to the metadata. This makes it easier to audit activity in the Snowflake environment and stay compliant with regulations such as GDPR or HIPAA.
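Access rules recorded in a catalog ultimately have to be enforced in Snowflake, typically through role-based access control. The sketch below turns a simple rule mapping into Snowflake GRANT statements. The role and table names are hypothetical, and the mapping format is an assumption for illustration, not the export format of Collibra, Alation, or any other catalog.

```python
# Hypothetical access rules: role name -> fully qualified tables it may read.
ACCESS_RULES = {
    "ANALYST": ["SALES.PUBLIC.ORDERS", "SALES.PUBLIC.CUSTOMERS"],
    "FINANCE": ["SALES.PUBLIC.ORDERS", "FINANCE.PUBLIC.INVOICES"],
}


def grant_statements(rules: dict[str, list[str]]) -> list[str]:
    """Build read-only GRANTs per role, sorted so the output is stable."""
    statements = []
    for role in sorted(rules):
        tables = sorted(rules[role])
        # Reading a table also requires USAGE on its database and schema.
        databases = sorted({t.split(".")[0] for t in tables})
        schemas = sorted({".".join(t.split(".")[:2]) for t in tables})
        statements += [f"GRANT USAGE ON DATABASE {db} TO ROLE {role};" for db in databases]
        statements += [f"GRANT USAGE ON SCHEMA {s} TO ROLE {role};" for s in schemas]
        statements += [f"GRANT SELECT ON TABLE {t} TO ROLE {role};" for t in tables]
    return statements


for statement in grant_statements(ACCESS_RULES):
    print(statement)
```

Generating grants from a single source of truth, and sorting them, makes it easy to review changes to access rules as ordinary diffs before applying them.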

Data Governance Tools

Specialized tools such as Collibra or Alation make it easier to define metadata, reduce data inconsistencies, identify relationships between fields, detect duplicate fields, monitor KPIs such as data quality scores, track usage history, maintain audit trails, keep sensitive information secure, and manage access control policies.

Automated Tests

Automated tests can ensure that your data is accurate and consistent across different systems after an ETL/ELT job has been run in Snowflake or when refreshed from other sources. This helps maintain accuracy for all metrics being reported.
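A common automated test is a reconciliation check that compares the source and the target after a load. The sketch below compares row counts and an order-independent checksum of the rows. The rows are plain Python tuples standing in for query results, so the fetching step and the function names are assumptions; against real systems you would fetch both sides with queries (Snowflake also provides SQL functions such as HASH_AGG for computing an aggregate hash inside the warehouse).

```python
import hashlib
from typing import Iterable, Sequence

_MOD = 1 << 256  # keep the running checksum within 256 bits


def table_fingerprint(rows: Iterable[Sequence]) -> tuple[int, str]:
    """Return (row_count, checksum); the checksum does not depend on row order."""
    count = 0
    total = 0
    for row in rows:
        # Hash a canonical text form of each row.
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        # Adding the per-row hashes is order-independent, and unlike XOR it
        # does not let duplicate rows cancel each other out.
        total = (total + int.from_bytes(digest, "big")) % _MOD
        count += 1
    return count, f"{total:064x}"


def reconcile(source_rows, target_rows) -> list[str]:
    """Compare source and target rows; return a list of problems found."""
    src_count, src_sum = table_fingerprint(source_rows)
    tgt_count, tgt_sum = table_fingerprint(target_rows)
    problems = []
    if src_count != tgt_count:
        problems.append(f"row count mismatch: source={src_count}, target={tgt_count}")
    elif src_sum != tgt_sum:
        problems.append("row contents differ (checksum mismatch)")
    return problems
```

An empty list means the load passed; anything else can fail the job or raise an alert before incorrect metrics reach a report.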

Overcoming Snowflake Challenges: Managing Costs

When using Snowflake, managing costs can be tricky. If you are not careful, running your Snowflake account can get quite expensive. Fortunately, there are many things you can do to keep your bills to a minimum.

Here are some of the key points to consider:

- Choose a billing model: Decide whether to pay on demand for the credits you use or to commit to pre-purchased capacity based on your estimated usage. On-demand pricing is more flexible and can be more cost-effective in the short term, while a capacity commitment typically comes with a lower per-credit price and may save more in the long run.

- Monitor usage: Review your compute (credit) and storage usage regularly so you can catch unexpected spikes that would drive up costs. Snowflake’s resource monitors can notify you, or suspend warehouses, when credit usage reaches a threshold you set.

- Scale up judiciously: Scale up only when a workload actually needs it, and avoid running warehouses that are larger than necessary; each step up in warehouse size doubles the credits consumed per hour.

- Turn off resources when not needed: Suspend warehouses and pause data pipelines when they are not actively used, rather than running them 24/7. Enabling auto-suspend and auto-resume on warehouses does this automatically.

- Take advantage of discounts: Check which discounts are available to your account, such as the lower per-credit rates that typically come with capacity commitments, and use them where they apply.
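To make "scale up judiciously" and "turn off resources when not needed" concrete, the sketch below estimates the monthly compute cost of one virtual warehouse. It uses Snowflake’s credit rates for standard warehouses, where each size step doubles the hourly credits (X-Small is 1 credit per hour, Small is 2, and so on). The price per credit and the running hours are hypothetical inputs, since real prices depend on your edition, region, and contract, and the model ignores per-second billing details such as the 60-second minimum charged each time a warehouse resumes.

```python
# Credits consumed per hour by standard virtual warehouses; each size doubles.
CREDITS_PER_HOUR = {
    "XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8,
    "XLARGE": 16, "2XLARGE": 32, "3XLARGE": 64, "4XLARGE": 128,
}


def monthly_cost(size: str, running_hours_per_day: float,
                 price_per_credit: float, days: int = 30) -> float:
    """Estimate monthly compute cost for one warehouse (simplified model)."""
    credits = CREDITS_PER_HOUR[size] * running_hours_per_day * days
    return credits * price_per_credit


# Hypothetical scenario: a MEDIUM warehouse left running 24/7 versus one that
# auto-suspends and only runs during about 8 hours of actual query activity.
PRICE = 3.00  # hypothetical price per credit, in dollars
always_on = monthly_cost("MEDIUM", 24, PRICE)
auto_suspended = monthly_cost("MEDIUM", 8, PRICE)
print(f"always on: ${always_on:,.2f}, auto-suspend: ${auto_suspended:,.2f}")
```

In Snowflake itself, idle shutdown is configured per warehouse, for example with ALTER WAREHOUSE my_wh SET AUTO_SUSPEND = 60 AUTO_RESUME = TRUE; where my_wh is a placeholder name and AUTO_SUSPEND is measured in seconds.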

Overcoming Snowflake Challenges: Optimizing Performance

You can further optimize Snowflake’s performance with the following steps:

Leverage Clustering Keys

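Defining a clustering key on a large table tells Snowflake to keep rows with similar key values together in the same micro-partitions. Because Snowflake records the minimum and maximum values of each column in every micro-partition, queries that filter on the clustering key can skip (prune) partitions that cannot contain matching rows. Clustering keys are most useful for very large tables that are frequently filtered on the same columns; keeping a table clustered consumes credits, so they are not worth adding everywhere. Note that micro-partitions are compressed automatically whether or not a clustering key is defined.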
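The effect of pruning is easy to see in a simplified simulation. The sketch below is not Snowflake’s implementation; the table, partition size, and data are made up. It keeps only the min/max of each partition and counts how many partitions a one-week range filter must scan when rows arrive in random order versus when they are ordered by the filter column.

```python
import random


def make_partitions(values: list[int], rows_per_partition: int) -> list[tuple[int, int]]:
    """Split values into fixed-size chunks and keep only each chunk's (min, max)."""
    chunks = (values[i:i + rows_per_partition]
              for i in range(0, len(values), rows_per_partition))
    return [(min(chunk), max(chunk)) for chunk in chunks]


def partitions_scanned(parts: list[tuple[int, int]], lo: int, hi: int) -> int:
    """Count partitions whose [min, max] range overlaps the filter [lo, hi]."""
    return sum(1 for pmin, pmax in parts if pmax >= lo and pmin <= hi)


# Hypothetical table: 100,000 rows with a day-of-year column from 0 to 364.
rng = random.Random(42)
days = [rng.randrange(365) for _ in range(100_000)]

unclustered = make_partitions(days, 1_000)        # rows in arrival order
clustered = make_partitions(sorted(days), 1_000)  # rows ordered by the key

# Equivalent of: WHERE day BETWEEN 100 AND 106
print("unclustered:", partitions_scanned(unclustered, 100, 106), "of", len(unclustered))
print("clustered:  ", partitions_scanned(clustered, 100, 106), "of", len(clustered))
```

With random arrival order, nearly every partition spans the whole year and must be scanned; once rows are ordered by the key, only the handful of partitions covering that week overlap the filter.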

Utilize Result Caching

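Snowflake’s result cache keeps the results of each query for 24 hours. If the same query is run again, by the same or another user with the required privileges, and the underlying data has not changed, Snowflake returns the cached result instead of rerunning the query. This saves both time and warehouse compute.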
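The reuse rules are easier to see in a toy model. The sketch below caches results keyed on the exact query text plus a version number for the underlying data, and expires entries after 24 hours. The class and function names are hypothetical, and the model ignores conditions the real cache also checks, such as the role’s privileges and non-reusable functions like RANDOM; in Snowflake, reuse can be switched off per session with the USE_CACHED_RESULT parameter.

```python
import time
from typing import Any, Callable, Optional

TTL_SECONDS = 24 * 60 * 60  # results are kept for 24 hours in this model


class ResultCache:
    """Toy query-result cache keyed on (query text, data version)."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self._clock = clock
        self._entries: dict[tuple[str, int], tuple[float, Any]] = {}

    def get(self, query: str, data_version: int) -> Optional[Any]:
        key = (query, data_version)
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, result = entry
        if self._clock() - stored_at > TTL_SECONDS:
            del self._entries[key]  # expired: the query must run again
            return None
        return result

    def put(self, query: str, data_version: int, result: Any) -> None:
        self._entries[(query, data_version)] = (self._clock(), result)


def run_query(cache: ResultCache, query: str, data_version: int,
              execute: Callable[[str], Any]) -> tuple[Any, bool]:
    """Return (result, served_from_cache); execute only on a cache miss."""
    cached = cache.get(query, data_version)
    if cached is not None:
        return cached, True
    result = execute(query)
    cache.put(query, data_version, result)
    return result, False
```

Because the data version is part of the key, any change to the underlying table automatically forces a fresh run instead of returning stale results.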

Create Tuned Queries

Snowflake’s query optimizer does a lot of work automatically, but the way you write SQL still affects how long a statement runs and how many credits it consumes. Select only the columns you need, filter as early as possible, and test important statements thoroughly, using Snowflake’s Query Profile to find bottlenecks.

Use Snowpipe to Automate Data Loading

Snowpipe automates loading data into tables and keeps them up to date as new data arrives. Instead of waiting for scheduled batch jobs, it loads files in micro-batches shortly after they land in a stage, which significantly reduces the delay between data arriving and being available for queries.
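A Snowpipe definition is essentially a COPY INTO statement wrapped in a pipe. The Python helper below assembles that DDL so it can be generated consistently per table. The pipe, table, and stage names are hypothetical; the sketch assumes the target table has a single VARIANT column for the JSON data, and AUTO_INGEST = TRUE assumes an external stage whose cloud event notifications (for example, S3 event notifications) have already been configured.

```python
import re

# Plain identifiers, optionally qualified as db.schema.name.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")
_FILE_TYPES = {"CSV", "JSON", "AVRO", "ORC", "PARQUET", "XML"}


def create_pipe_sql(pipe: str, table: str, stage: str, file_type: str = "JSON") -> str:
    """Build a CREATE PIPE statement that continuously loads files from a stage."""
    for name in (pipe, table, stage):
        # Reject anything that is not a plain identifier to avoid SQL injection.
        if not _IDENT.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    if file_type.upper() not in _FILE_TYPES:
        raise ValueError(f"unsupported file type: {file_type!r}")
    return (
        f"CREATE PIPE {pipe}\n"
        f"  AUTO_INGEST = TRUE\n"
        f"AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        f"  FILE_FORMAT = (TYPE = '{file_type.upper()}');"
    )


print(create_pipe_sql("raw.events_pipe", "raw.events", "raw.events_stage"))
```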

Overall, optimizing Snowflake performance comes down to tuning queries, using clustering keys where they help, taking advantage of result caching, and automating data loading with Snowpipe so tables stay up to date with new incoming data. Together, these steps help your data platform run efficiently, which means lower costs, faster queries, and fresher data for your data consumers.

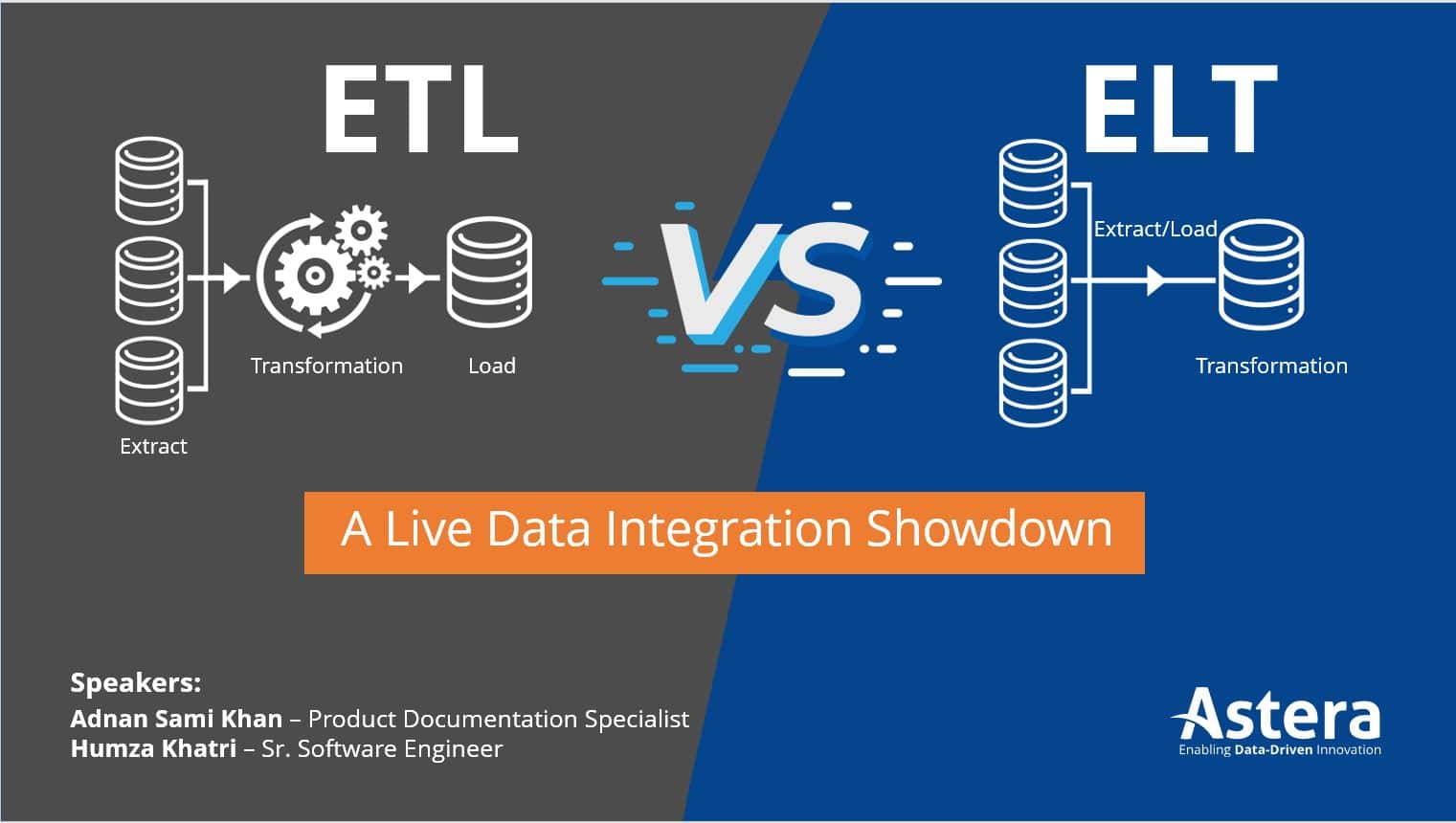

Choosing Between ETL vs. ELT in a Snowflake Environment

Have you been trying to decide between ETL vs. ELT when working with Snowflake? Whether you’re new to the platform or an experienced user, it’s important to understand the key differences between the two.

Extract, Transform, and Load (ETL)

ETL is a traditional data processing approach that extracts data from source systems, transforms it into a format usable by the target system, and then loads it into the data warehouse. This requires planning each step of your data pipeline, from extracting data from source systems to loading the transformed data into Snowflake. The most significant advantage of ETL is control: you define exactly which steps are taken for each task, and only transformed data reaches the warehouse.
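Here is a minimal ETL sketch in Python showing the ordering: records are extracted, cleaned and typed outside the warehouse, and only the finished rows are loaded. The source data and field names are hypothetical, and the load step simply appends to a list where a real pipeline would write to Snowflake (for example, through a connector or a staged file followed by COPY INTO).

```python
from datetime import date


def extract() -> list[dict]:
    """Stand-in for reading from a source system (hypothetical data)."""
    return [
        {"id": "1", "name": " Alice ", "signup": "2024-01-15"},
        {"id": "2", "name": "bob", "signup": "2024-02-03"},
    ]


def transform(rows: list[dict]) -> list[tuple[int, str, date]]:
    """Clean and type the data before it reaches the warehouse."""
    return [
        (int(r["id"]), r["name"].strip().title(), date.fromisoformat(r["signup"]))
        for r in rows
    ]


def load(rows: list[tuple[int, str, date]], target: list) -> None:
    """Stand-in for writing the transformed rows to the target table."""
    target.extend(rows)


warehouse_table: list = []
load(transform(extract()), warehouse_table)
```

Because transformation happens before loading, the warehouse never sees the raw, untyped values; the trade-off is that any new transformation means changing and rerunning the pipeline itself.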

Extract, Load, and Transform (ELT)

On the other hand, ELT takes advantage of the scalability and parallelism of Snowflake’s cloud-native architecture. In ELT pipelines, your raw data is loaded quickly into Snowflake before being transformed into usable formats. This is especially useful when you’re dealing with large volumes of semi-structured data, such as JSON files. ELT minimizes complexity by letting you use Snowflake’s built-in SQL functions to transform data after it is loaded.
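Here is a minimal ELT sketch: the raw JSON lands in Snowflake untouched, and the transformation runs as SQL inside the warehouse. The Python only assembles the statements; the table, stage, and field names are hypothetical. The SQL relies on Snowflake’s VARIANT type and its path syntax (column:field::TYPE) for querying semi-structured data.

```python
def elt_statements(stage: str = "raw_stage") -> list[str]:
    """Return the load-then-transform steps of a simple ELT job."""
    return [
        # 1. Load: land each JSON document as-is in a single VARIANT column.
        "CREATE TABLE IF NOT EXISTS raw_customers (v VARIANT);",
        f"COPY INTO raw_customers FROM @{stage} FILE_FORMAT = (TYPE = 'JSON');",
        # 2. Transform: shape the raw data with SQL, using Snowflake's compute.
        """CREATE OR REPLACE TABLE customers AS
SELECT
    v:id::NUMBER                  AS id,
    INITCAP(TRIM(v:name::STRING)) AS name,
    v:signup::DATE                AS signup_date
FROM raw_customers;""",
    ]


for statement in elt_statements():
    print(statement, end="\n\n")
```

Since the raw table is kept, the transformation step can be changed and rerun at any time without reloading data from the source.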

The biggest benefit of ELT is speed: it removes the separate transformation stage that ETL pipelines run before loading, while still giving you complete control over how your source data is loaded and transformed in Snowflake.

Ultimately, there’s no one-size-fits-all answer when choosing between ETL and ELT: it all depends on the use case.

Conclusion

Snowflake challenges can often seem daunting and insurmountable, but by taking the time to understand the data landscape, establish processes and governance, and properly utilize the data tools available, these challenges can be easily navigated.

While various tools on the market can help with these challenges, Astera Centerprise stands out for its powerful data integration capabilities, which allow businesses to connect to Snowflake and other data sources seamlessly. This end-to-end data integration tool allows businesses to design, execute, and monitor complex workflows, automate data quality checks, and optimize performance and cost efficiency.

By leveraging the benefits of Astera Centerprise, organizations can focus on collecting meaningful insights from their data while ensuring that it is accurate, secure, and compliant with industry regulations.

Overall, Astera Centerprise is an ideal solution for businesses that need to manage their data on the cloud-based Snowflake platform. With its user-friendly, no-code platform, Astera Centerprise helps organizations tackle many challenges associated with data management. It frees up valuable time and resources to focus on what really matters: deriving insights from data and driving business success.

NEW RELEASE ALERT

March 27th, 2025
