Your Comprehensive Guide to Data Processing

In 2025, the total amount of data stored globally is estimated at around 200 zettabytes. For context, that’s enough to fill roughly 1 trillion iPhones. We get it: these numbers may sound overwhelming. But with great data comes great responsibility.

Data’s real value lies in how well it is being processed. In fact, effective data processing is critical for companies to gain access to valuable insights and maintain a competitive edge.

Understanding data processing, and carrying it out according to best practices, can therefore help enterprises identify new avenues of growth and success.

In this blog, we’ll discuss data processing, its different stages, types, technologies, and applications. Lastly, we’ll also go over how Astera helps enterprises around the world convert their data into insights with robust data processing.

What is Data Processing?

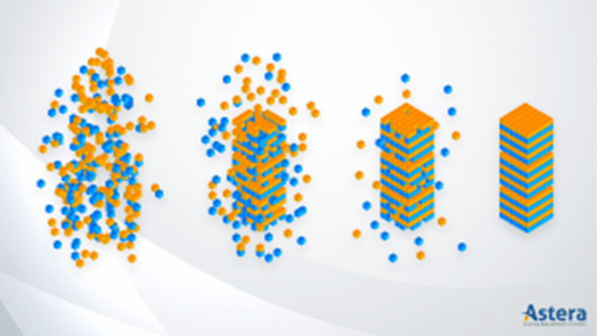

Data processing is an umbrella term that refers to any and all processes involved in transforming raw data into valuable information.

Who does it?

Data scientists typically process data, which includes collecting, organizing, cleaning, validating, analyzing, and converting it into suitable formats such as graphs or documents. Generally speaking, data processing can be done using three methods: manual, mechanical, and electronic.

Why do it?

The idea is to increase the value of information and facilitate decision-making. This enables businesses to improve their operations and make timely strategic decisions. Automated data processing solutions leveraging AI and ML technologies play a significant role in this.

In short, data processing turns large amounts of data, including big data, into meaningful insights for effective and timely management and decision-making.

Cut down your data processing time from hours to minutes with Astera

Data processing is important, yes, but it doesn't have to be slow. Try out a smarter way to process your data.

Try Astera.

The Six Stages of the Data Processing Cycle

The data processing cycle outlines the steps that one needs to perform on raw data to convert it into valuable and purposeful information. This process involves the following six stages:

1. Data Collection

Data is gathered from reliable sources, including databases, data lakes, and data warehouses. It is crucial that the data sources are accurate, dependable, and well-built so that the collected data, and the information derived from it, is of high quality.

2. Data Preparation

The data collected in the first stage is then prepared and cleaned. In this stage, also referred to as “pre-processing,” the raw data is organized to support the stages that follow. Data cleaning involves eliminating errors, removing noise, and discarding bad (inaccurate or incorrect) data so that only high-quality data remains.
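The cleaning step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: records are plain dictionaries with hashable values, and the `clean_records` name and the sample fields are hypothetical rather than part of any Astera product.

```python
def clean_records(records, required_fields):
    """Trim whitespace, drop incomplete rows, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        # Normalize: strip surrounding whitespace from string values.
        normalized = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Discard rows where a required field is absent, None, or empty.
        if any(normalized.get(field) in (None, "") for field in required_fields):
            continue
        # Discard rows identical to one already kept (assumes hashable values).
        fingerprint = tuple(sorted(normalized.items()))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(normalized)
    return cleaned


# Hypothetical sample data: one duplicate and two incomplete rows.
raw = [
    {"id": 1, "email": " a@example.com "},
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": None},
]
print(clean_records(raw, required_fields=["id", "email"]))
```

Real-world preparation usually adds type checks and validation rules on top of this, but the shape is the same: normalize, filter, deduplicate.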

3. Data Input

This is the stage in which the data starts to take an informational form. During this stage, clean data is entered into a system or destination (such as a data warehousing solution like Astera Data Warehouse Builder or a CRM like Salesforce). This is done by translating it into a language that the system can understand, either manually or through input devices set up to collect structured or unstructured data.

4. Data Processing

This stage involves processing data for interpretation using machine learning and artificial intelligence algorithms. The actual process may differ based on the source of the data (data lakes, social networks, connected devices) and its intended use or purpose (deriving patterns and trends, determining solutions or strategies, and optimization).

5. Data Output

In the data output stage, also referred to as the data interpretation stage, the processor translates and presents data in a readable data format, such as documents, graphs, images, etc. Now the data is usable by all members of the organization, and not only data scientists, to help them in their respective data analytics projects.

6. Data Storage

This final stage of the cycle involves storing the processed data for future use. This step takes place after using the information required for immediate implementations and insights. In this stage, organizations store data for reference purposes or to allow easy and quick access to members of the organization for future use.

7 Types of Data Processing

As we discussed before, data processing is an umbrella term for numerous processes. Depending on the specific use case, different methods may be employed, which is why as many as seven different types of data processing are in common use globally.

Each type serves a different purpose, and their implementation largely depends on the available data and the organization’s specific needs.

1. Batch Processing

The system collects data over a period of time and then processes it in groups, or batches, rather than one record at a time. This allows for the smooth handling of large data volumes during off-peak hours for resource optimization and minimum impact on daily operations.

Example: Banks process non-urgent transactions and checks overnight. This ensures account balances are updated in one go for maximum accuracy and efficiency.
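As a rough sketch of the batch idea, the Python below groups accumulated transactions into fixed-size batches and applies them to account balances in one pass. The function names, the `(account, amount_in_cents)` tuple layout, and the batch size are assumptions made for illustration, not a description of how any bank's system works.

```python
from collections import defaultdict
from itertools import islice


def in_batches(items, batch_size):
    """Yield successive lists of at most batch_size items."""
    iterator = iter(items)
    while batch := list(islice(iterator, batch_size)):
        yield batch


def apply_batches(transactions, batch_size=1000):
    """Apply (account, amount_in_cents) transactions batch by batch."""
    balances = defaultdict(int)
    for batch in in_batches(transactions, batch_size):
        # Each batch is handled as one unit of work, e.g. one overnight job step.
        for account, amount_cents in batch:
            balances[account] += amount_cents
    return dict(balances)


# Hypothetical transactions collected during the day.
day = [("A", 100), ("B", -50), ("A", 25)]
print(apply_batches(day, batch_size=2))
```

Amounts are kept as integer cents to avoid floating-point rounding in the running totals.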

2. Real-time Processing

As the name suggests, this type of processing is used when time is of the essence. It typically involves processing and transferring data as soon as the system obtains it, to assist in rapid decision-making.

Example: Navigation systems utilize real-time processing to provide turn-by-turn directions and account for traffic conditions and route changes as they happen.
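In contrast to batch processing, a real-time system updates its result with every new reading. A minimal Python sketch, assuming readings (say, traffic speeds) arrive one at a time; the `rolling_average` name and the sample values are hypothetical:

```python
from collections import deque


def rolling_average(readings, window=3):
    """Yield an updated average as each new reading arrives."""
    recent = deque(maxlen=window)  # keeps only the latest `window` readings
    for value in readings:
        recent.append(value)
        yield sum(recent) / len(recent)


# Each output is available immediately after its input, without waiting
# for the rest of the stream.
for average in rolling_average([10, 20, 30, 40], window=2):
    print(average)
```

Because the function is a generator, it works the same way on a finite list and on an open-ended stream of incoming values.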

3. Online Processing

Online processing allows data to be processed interactively over a network, generating instant responses with continuous input and output. This type of data processing allows systems to handle user requests instantly over the internet, making it essential for e-commerce and other online services.

Example: Banks use online processing to handle financial transactions in real time, allowing users to transfer funds, pay bills, and check account balances.

4. Parallel Processing (Multiprocessing)

This type involves utilizing multiple processing units to distribute data processing among them, while ensuring coherent execution. Parallel processing is useful when performing complex tasks, allowing for processing to be done efficiently through concurrent tasks.

Example: Smartphones perform multiple tasks, such as GPS navigation, video streaming, and phone calls simultaneously.
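To make the idea concrete, here is a small Python sketch that splits a workload into chunks and hands each chunk to a separate worker process using the standard library's `concurrent.futures`. The function names and the sum-of-squares workload are placeholders chosen for illustration.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def sum_of_squares(chunk):
    """The unit of work each worker performs on its own chunk."""
    return sum(x * x for x in chunk)


def split_into_chunks(values, n_chunks):
    """Split values into at most n_chunks contiguous slices."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def parallel_sum_of_squares(values, n_workers=4, executor_cls=ProcessPoolExecutor):
    """Process the chunks concurrently and combine the partial results."""
    chunks = split_into_chunks(values, n_workers)
    # ThreadPoolExecutor can be passed instead, e.g. for I/O-bound work.
    with executor_cls(max_workers=n_workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))
```

When run as a script with the default process pool, the call should sit under an `if __name__ == "__main__":` guard, for example `print(parallel_sum_of_squares(list(range(1_000_000))))`, so that worker processes can import the module safely.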

5. Automated Processing

This type of processing utilizes software that can automate routine tasks involved in data processing. Automated processing can reduce the need for manual input and increase overall efficiency.

Example: Automated billing systems can automatically calculate and charge customers to streamline billing operations and reduce manual input.

6. Cloud Computing

Cloud computing lets organizations utilize computing resources over the internet, offering scalability and flexibility. These resources can include servers, storage, databases, and processing units, which can be accessed as needed without having to install and maintain additional IT infrastructure.

Example: Astera offers data prep in the cloud to help organizations perform data preparation tasks without having to invest in additional resources.

7. Distributed Processing

Distributed processing, similar to multiprocessing, utilizes multiple computers or devices to improve processing efficiency. By utilizing the collective capacity of multiple systems, this type of processing can handle large-scale tasks efficiently.

Example: The distributed processing model is used by video streaming services such as Netflix to process and deliver content efficiently. This is done by storing the videos on multiple servers to provide quick access and smooth playback.

Tired of processing your data the old way? Try the Astera way!

Astera's no-code solution streamlines your data processing efforts.

View Demo to See How Astera Can Help

Technologies Used in Data Processing

Multiple technologies are used in the different stages of data processing. In this section, we’ll look at the most important ones, such as data warehouses, Machine Learning (ML) algorithms, cloud technology, and analytics platforms.

ML and AI Algorithms

Machine Learning (ML) and Artificial Intelligence (AI) are technologies that power most modern data processing solutions. ML is a subset of AI, and deep learning is in turn a subset of ML. ML and AI algorithms uncover patterns and make predictions based on available data. Python, SAS, and R are some of the most commonly used languages for ML.

These algorithms are also used for automating processes such as data collection, preparation, analytics, anomaly detection, etc.

For instance, Astera’s data integration solution uses AI mapping to automatically map relevant fields between different sources and destinations in a data pipeline. This can save considerable time and effort, especially for complex workflows.

Cloud Technologies

Cloud computing offers unprecedented scalability and flexibility, allowing organizations to scale their data processing up or down according to their specific needs.

The use of cloud technologies has also made analytics and BI platforms accessible for smaller businesses as they no longer need to set up big data centers to process their data.

Databases, Data Warehouses, & Data Lakes

Databases are crucial for storing structured data, but they do more than just store it. In addition to storage, databases, data warehouses, and data lakes allow users to query, update, and retrieve information efficiently.

Relational databases are typically queried using SQL (Structured Query Language). Popular examples include MySQL, SQL Server, and PostgreSQL.

Data warehouses and data lakes, on the other hand, are storage systems designed for large-scale operations. They are designed to connect to various sources and can be optimized to query and analyze large datasets. Data warehouses and data lakes are typically used to support analytics and business intelligence (BI) initiatives.
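To illustrate the query side of a relational database, the sketch below uses Python's built-in `sqlite3` module with a throwaway in-memory database. SQLite is used here only because it needs no setup; the `orders` table and the `total_by_customer` helper are hypothetical.

```python
import sqlite3


def total_by_customer(orders):
    """Load (customer, amount) rows into SQLite and aggregate them with SQL."""
    conn = sqlite3.connect(":memory:")  # temporary database, gone on close
    try:
        conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
        # Parameterized inserts keep the data separate from the SQL text.
        conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
        rows = conn.execute(
            "SELECT customer, SUM(amount) FROM orders "
            "GROUP BY customer ORDER BY customer"
        ).fetchall()
    finally:
        conn.close()
    return rows


print(total_by_customer([("ana", 10.0), ("ben", 5.0), ("ana", 2.5)]))
```

The same `SELECT ... GROUP BY` statement would run largely unchanged on MySQL, SQL Server, or PostgreSQL, which is much of the appeal of SQL as a common query language.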

Applications of Data Processing

Effective data processing can be crucial in various industries. It enables organizations to make informed decisions, streamline operations, and improve overall efficiency. Let’s look at some of the domains where data processing is already crucial or increasingly becoming so.

1. Healthcare and Life Sciences

Healthcare organizations rely on data processing to manage electronic health records (EHR), process insurance claims, and analyze medical imaging. For instance, hospitals use AI-driven data processing to detect anomalies in medical scans, helping doctors diagnose diseases like cancer at an early stage. In addition, healthcare providers have to process patient data to personalize treatment plans and enhance overall patient care.

2. Finance & Banking

The financial sector relies heavily on real-time and batch data processing to detect fraudulent transactions, assess credit risk, and automate trading strategies. Fraud detection systems use machine learning algorithms to analyze transaction patterns and identify potential threats.

Plus, banks rely on data processing for accurate account reconciliation, loan approvals, and predictive analytics for investment strategies.
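Production fraud detection relies on trained models, but the underlying idea of spotting transactions that break a pattern can be shown with a much simpler statistical stand-in. The Python sketch below flags amounts that sit far from the average, measured in standard deviations. It is not a machine learning model, and the `flag_outliers` name, the threshold, and the sample amounts are all assumptions for illustration.

```python
from statistics import mean, pstdev


def flag_outliers(amounts, threshold=3.0):
    """Return amounts more than `threshold` standard deviations from the mean."""
    if len(amounts) < 2:
        return []  # not enough data to define a pattern
    average = mean(amounts)
    spread = pstdev(amounts)
    if spread == 0:
        return []  # all amounts identical, nothing stands out
    return [a for a in amounts if abs(a - average) / spread > threshold]


# Hypothetical card transactions: five typical purchases and one unusual one.
history = [20, 22, 19, 21, 20, 500]
print(flag_outliers(history, threshold=2.0))
```

A threshold of 2.0 is used in the example because, with only six data points, no single value can lie more than about 2.24 standard deviations from the mean.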

3. Retail & E-Commerce

Retailers utilize data processing to analyze customer preferences, optimize inventory management, and personalize marketing campaigns. Transactional data, customer behavior patterns, and supply chain logistics can be analyzed to streamline operations.

For example, an e-commerce platform may use data processing for recommendation engines, dynamically displaying products based on a customer’s browsing history and purchase behavior.

4. Manufacturing & Supply Chain Management

Manufacturers leverage data processing to optimize production lines, forecast demand, and prevent equipment failure. Through predictive maintenance powered by machine learning, companies can analyze sensor data from machinery and preemptively address potential issues before they cause downtime.

Additionally, real-time data analytics help companies optimize supply chain logistics by predicting inventory needs and reducing waste.

5. Government & Public Sector

Governments worldwide leverage data processing for effective policymaking, public administration, and service delivery. From population statistics and census data analysis to public safety and infrastructure management, data processing enables informed decision-making and efficient resource allocation.

Law enforcement agencies may also use real-time data analytics for crime prediction and prevention.

6. Telecommunications & Media

Telecommunications companies use data processing for network optimization, billing, and customer analytics. Content providers and streaming platforms leverage it to recommend personalized content based on user preferences and viewing history.

7. Education & Research

Educational institutions use data processing to analyze student performance, tailor curriculum design, and improve administrative processes. Universities also use data analytics to track student progress, identify learning gaps, and enhance their educational offerings.

8. Transportation & Logistics

Logistics companies rely on real-time data processing for fleet management, route optimization, and demand forecasting. This ensures timely deliveries, cost savings, and improved customer satisfaction. Airlines, for instance, use data processing to optimize flight routes, improve fuel efficiency, and enhance passenger experiences.

The Future of Data Processing

Data processing is undergoing something of a revolution, thanks to the rapid pace at which AI technologies are advancing. Combine this with the scalability of cloud computing, and the limits on how much data can be processed, and how deeply, are being pushed further out.

This not only means that organizations big and small can leverage data processing for better decision-making, but it also means that doing so is much more cost-effective and efficient.

These technological advancements also mean that data processing solutions are becoming much more sophisticated. For instance, features such as repetitive task automation, self-adapting data pipelines, data preparation in the cloud, etc., are empowering data users around the world.

That’s where Astera comes in.

Start Your Data Processing Journey with Astera

Automated data processing is the way forward as its manual counterpart has become redundant. It allows for sustainable solutions with reduced chances of errors, minimal execution time, and lower investment.

Businesses are now relying more on quality data, and this need will continue to increase. Data automation streamlines business operations by removing repetitive manual tasks, enabling you to focus on business growth. Automated data processing further helps business users make critical business decisions promptly, in real time.

Astera utilizes technology that accurately and efficiently prepares, cleanses, validates, and stores data. It enables faster innovation and the availability of reliable data at each step. Our data integration solution allows data automation through job scheduling, AI-powered mapping, automated data pipelines, and much more.

Book a personalized demo today to see what Astera can do for your organization.

Astera AI Agent Builder - First Look Coming Soon!
