The Best Data Ingestion Tools in 2024

Data ingestion plays a central role in collecting data from various sources and transferring it to storage or processing systems.

In this blog, we compare the best data ingestion tools available on the market in 2024. We cover their features, pros, and cons to help you select the best software for your use case.

What is Data Ingestion?

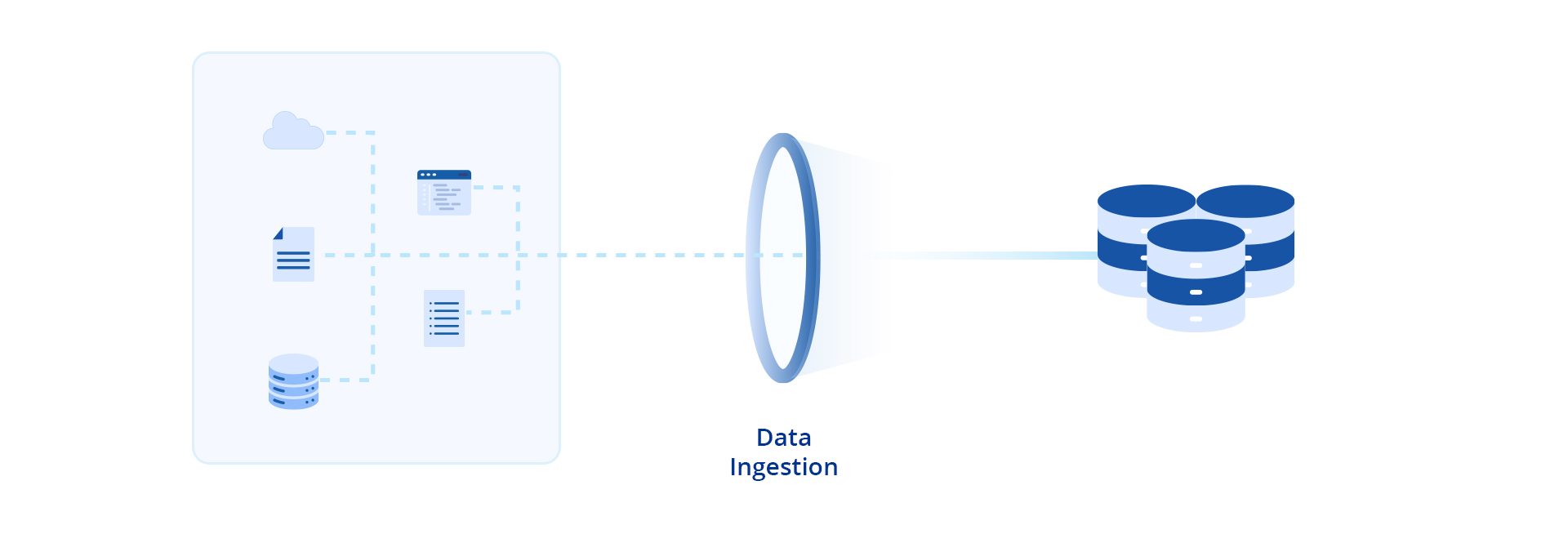

Data ingestion is the process of collecting and importing data from various sources into a database or other target system for further analysis, storage, or processing. It can run in two primary modes: real-time processing, where data is ingested and processed as soon as it is generated, and batch processing, where data is collected at set intervals and processed together.
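As a rough illustration of the two modes, the sketch below uses plain Python with an in-memory list standing in for the destination. The function names (`ingest_realtime`, `ingest_batch`) and the `batch_size` parameter are hypothetical and not tied to any tool in this post. For simplicity, a batch here is closed by record count; real tools often close it on a time interval instead.

```python
from typing import Iterable, Iterator, List


def ingest_realtime(events: Iterable[dict], sink: List[dict]) -> None:
    """Write each event to the sink as soon as it arrives."""
    for event in events:
        sink.append(event)


def batches(events: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Group incoming events into fixed-size batches."""
    batch: List[dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly smaller, batch
        yield batch


def ingest_batch(events: Iterable[dict], sink: List[dict], batch_size: int = 3) -> int:
    """Write events to the sink one batch at a time; return the number of writes."""
    writes = 0
    for batch in batches(events, batch_size):
        sink.extend(batch)
        writes += 1
    return writes


# Hypothetical sample data: seven events from one source.
events = [{"id": i} for i in range(7)]
realtime_sink: List[dict] = []
batch_sink: List[dict] = []

ingest_realtime(events, realtime_sink)
batch_writes = ingest_batch(events, batch_sink, batch_size=3)
```

Both sinks end up with the same records. The difference is how often the destination is written to: once per event in the real-time path, once per batch in the batch path.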

What are Data Ingestion Tools?

Data ingestion tools are software solutions designed to automate data transfer from various sources, such as cloud storage and file systems, to designated storage or analysis systems. These tools streamline data gathering, reduce the need for manual intervention, and enable organizations to focus more on data analysis and insight generation.

There are different types of data ingestion tools, each catering to a specific aspect of data handling.

- Standalone Data Ingestion Tools: These focus on efficiently capturing and delivering data to target systems like data lakes and data warehouses. They offer features like data capture, batch and real-time processing, and basic data transformation capabilities. While standalone tools fit ingestion-specific use cases, many organizations prefer solutions that are more flexible.

- ETL (Extract, Transform, Load) Tools: While ETL tools can handle the overall data integration process, they are also often used for data ingestion.

- Data Integration Platforms: Data integration platforms offer multiple data handling capabilities, including ingestion, integration, transformation, and management.

- Real-Time Data Streaming Tools: These tools ingest data continuously as it appears, making it available for immediate analysis. They are ideal for scenarios where timely data is critical, like financial trading or online services monitoring.

Benefits of Data Ingestion Tools

Data ingestion tools offer several benefits, including:

- Faster Data Delivery: Automating data ingestion speeds up data delivery and makes it easier to schedule ingestion jobs efficiently.

- Improved Scalability: Automated data ingestion tools facilitate adding new data sources as the company grows and allow for real-time adjustments to data collection processes.

- Data Uniformity: Data ingestion tools extract information from different sources and convert it into a unified dataset. Organizations can use this dataset for business intelligence, reports, and analytics.

- Easier Skill Development: Many data ingestion tools are designed with non-technical users in mind and feature simplified interfaces that make them easier to learn and use.

How Do Data Ingestion Tools Work?

Data ingestion tools help move data from various sources to a destination where it can be stored and analyzed. These tools use multiple protocols and APIs, such as HTTP/HTTPS, ODBC, JDBC, FTP/SFTP, AMQP, and WebSockets, to connect with and transfer data from sources such as databases, cloud storage, files, and streaming platforms.

First, these tools collect data from the sources, using predefined or custom queries to locate it. Data from different sources often arrives in different formats or structures, so the tools then transform it into a consistent format and structure. Finally, they load the data into databases or data warehouses for analysis.
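The collect, transform, and load steps described above can be sketched in a few lines of Python using only the standard library. Everything here is an assumption made for illustration: the two inline sources (`CSV_SOURCE`, `JSON_SOURCE`), their field names, and the `users` table are hypothetical, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical sources describing the same kind of record in different formats.
CSV_SOURCE = "id,name,signup_date\n1,Ada,2024-01-05\n2,Grace,2024-02-10\n"
JSON_SOURCE = '[{"user_id": 3, "full_name": "Linus", "signup": "2024/03/15"}]'


def extract():
    """Collect raw records from each source."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Map both sources onto one schema: (id, name, signup_date)."""
    unified = [(int(r["id"]), r["name"], r["signup_date"]) for r in csv_rows]
    for r in json_rows:
        # Normalize the date separator so every row uses YYYY-MM-DD.
        signup = r["signup"].replace("/", "-")
        unified.append((int(r["user_id"]), r["full_name"], signup))
    return unified


def load(rows, conn):
    """Write the unified rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
loaded = conn.execute(
    "SELECT id, name, signup_date FROM users ORDER BY id"
).fetchall()
```

After the run, `loaded` holds three rows with the same columns and date format, regardless of which source each row came from.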

Data ingestion tools can also move data directly into the destination system, without intermediate transformation, when loading the data as quickly as possible is the priority.

Why are Data Ingestion Tools Important?

Data comes in many forms and from many places. A business might have data in cloud storage like Amazon S3, databases like MySQL, and coming in live from web apps. Transferring this data to necessary locations would be slow and difficult without data ingestion tools.

Data ingestion solutions simplify and accelerate this process. They automatically capture incoming data, allowing businesses to quickly analyze their data and make timely decisions based on current events rather than outdated information.

These tools are also flexible. They can manage dynamic data sources and incorporate data from new sources without requiring a complete system overhaul. This flexibility allows businesses to continuously update and expand their data management strategies without disruption. For example, if a company starts getting data from a new source, the tool can add that source without starting from scratch.
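One common way to get this kind of flexibility is a connector registry, where each source is a small plug-in and the pipeline itself never changes. The sketch below is a simplified assumption about how such a design might look, not a description of any specific product; `CONNECTORS`, `register`, and the two source names are hypothetical.

```python
from typing import Callable, Dict, Iterable, List

# Registry mapping a source name to a function that returns its records.
CONNECTORS: Dict[str, Callable[[], Iterable[dict]]] = {}


def register(name: str):
    """Decorator that adds a connector function to the registry."""
    def wrapper(fn):
        CONNECTORS[name] = fn
        return fn
    return wrapper


@register("orders_db")
def read_orders():
    # Stand-in for a real database read.
    return [{"source": "orders_db", "value": 10}]


def run_pipeline() -> List[dict]:
    """Pull records from every registered connector into one list."""
    records: List[dict] = []
    for name in sorted(CONNECTORS):
        records.extend(CONNECTORS[name]())
    return records


before = run_pipeline()


# Adding a new source later only means registering one more connector;
# run_pipeline() itself is untouched.
@register("web_events")
def read_web_events():
    # Stand-in for a real event-stream read.
    return [{"source": "web_events", "value": 7}]


after = run_pipeline()
```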

Top 8 Data Ingestion Tools in 2024

Various data ingestion tools in the market offer plenty of features and cater to specific business requirements. Below is a list of some of the best data ingestion solutions and their key features.

Astera

Astera is an enterprise-grade data platform that simplifies and streamlines data management. From data ingestion and validation to transformation and preparation to loading into a data warehouse, it accelerates time-to-insight by automating data movement. Astera’s suite of solutions caters to unstructured data extraction, data preparation, data integration, EDI and API management, data warehouse building, and data governance.

- With Astera’s no-code platform, ingest data from various sources into your data ecosystem without writing a single line of code.

- Astera offers native connectors to databases, file formats, data warehouses, data lakes, and other sources. You can easily access and ingest data from any source, regardless of format or location.

- Astera’s built-in transformations help to clean, enrich, and transform your data. From simple data cleansing to complex data transformations, Astera prepares your data for analysis and decision-making without technical expertise.

- Astera’s intuitive UI and unified design simplify data ingestion. Easily navigate the platform, design data pipelines, and quickly execute workflows.

- Astera’s parallel processing ETL engine enables you to handle large volumes of data efficiently. It provides optimal performance and scalability, allowing you to meet the growing data demands of your enterprise.

- Astera provides award-winning customer support and extensive training and documentation to help you maximize your data ingestion efforts. The platform offers the support and resources you need, from onboarding to troubleshooting.

Keboola

Keboola is an ETL platform designed for performing complex tasks. It provides custom options for data ingestion and gives users a clear view of their ETL setups. The platform supports various data stores, such as Snowflake and Redshift, and allows for SQL, Python, and R transformations.

Pros

- Offers pre-built connectors to streamline data ingestion across multiple data sources and destinations.

- Users can write transformations in various languages and load or directly store the data within Keboola.

- Offers customized data sourcing for authentic analysis.

Cons

- Modifying the schema or manipulating data can be complex with internal file-based storage.

- The cross-branch change review sometimes fails to detect the changes.

- Users must manually set up the webhooks or API triggers to import event data.

Airbyte

Airbyte is an open-source data integration platform. It allows businesses to build ELT data pipelines. It enables data engineers to establish log-based incremental replication.

Pros

- The Connector Development Kit (CDK) allows for creating or modifying connectors in almost any programming language.

- Replicates a decent volume of data using change data capture (CDC) and SSH tunnels.

- Users can use plain SQL or dbt to transform the data.

Cons

- Scheduler sometimes interrupts jobs unexpectedly.

- Regular updates require users to install new versions often.

- Predicting usage and controlling costs become difficult as data volumes grow.

Matillion

Matillion ETL is a data ingestion tool allowing users to create pipelines using a no-code/low-code, drag-and-drop web interface.

Pros

- Its primary focus is on batch data processing, which is optimized for the transformation and loading phase of the ETL process within the cloud data warehouses.

- It replicates SQL tables using change data capture (CDC) by design.

- Matillion’s cloud-native transform engine scales to manage large datasets.

Cons

- It sometimes struggles to scale hardware infrastructure, particularly EC2 instances, for more resource-intensive transformations.

- Users often complain about outdated documentation with new version releases.

- Matillion struggles with collaboration. Teams larger than five face challenges working together on the same data ingestion workflows.

Talend

Talend is a low-code platform that collects data from different sources and transforms it for insights. The tool integrates data ingestion, transformation, and mapping with automated quality checks.

Pros

- It offers pre-built components for data ingestion from different sources.

- Users can design or reuse data pipelines in the cloud.

- It offers low-code and automated data replication.

Cons

- Talend’s software is complex, requiring learning time before using it confidently, even for simple data ingestion pipelines.

- Documentation for features is often incomplete.

- Version upgrades, capacity changes, and other common configuration tasks are not automated.

Hevo Data

Hevo Data is a no-code, cloud-based ETL platform that simplifies data ingestion for business users without coding skills.

Pros

- The API allows easy integration of Hevo into the data workflow and enables performing pipeline actions without accessing the dashboard.

- It offers end-to-end encryption and security options.

- The no-code data ingestion pipelines use a graphical UI to simplify creating ingestion workflows.

Cons

- It limits data integration into BI tools or exporting data to files through integration workflows.

- It does not offer customization of components or logic, and users cannot write their own code.

- It offers very limited data extraction sources.

Apache Kafka

Apache Kafka is an open-source distributed platform suitable for real-time data ingestion.

Pros

- It supports low latency for real-time data streaming.

- It can adjust storage and processing to handle petabytes of data.

- The platform ensures data persistence across distributed and durable clusters.

Cons

- It is complex software with a steep learning curve, and understanding its architecture takes time.

- Users face challenges while working on small data sources.

- Kafka’s replication and storage mechanisms require significant hardware resources.

Amazon Kinesis

Amazon Kinesis is a cloud-hosted data service that extracts, processes, and analyzes your data streams in real time. This solution captures, stores, and processes data streams and videos.

Pros

- It offers low latency, meaning analytics applications can access streaming data within 70 milliseconds after collection.

- The Kinesis app integrates with many other AWS services, allowing users to build complete applications.

- It automatically provisions and scales resources in on-demand mode.

Cons

- It is not well suited to on-premises or multi-cloud data ingestion, as it is tightly integrated with the AWS ecosystem.

- Users must utilize separate services to analyze or store data, as it only focuses on data migration.

- Its documentation is often unclear, which can confuse users.

How to Choose the Right Data Ingestion Platform?

Opting for the right data ingestion tool directly impacts the data management strategy of an organization. Various factors should be considered while choosing the data ingestion platform.

Data Sources and Formats

Businesses should consider if the tool supports connectivity with all relevant data sources, including databases, cloud services, APIs, and streaming platforms. Also, they need to verify if the tool can handle various data formats, such as structured, semi-structured, and unstructured data, to meet their specific data ingestion requirements.

Scalability and Performance

The scalability of the data ingestion tool is key for handling increasing data volumes without sacrificing performance. Businesses should look for features like parallel processing and distributed architectures. These can handle large datasets effectively, ensuring data is processed smoothly and quickly as the company expands.
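To make the idea of parallel processing concrete, here is a minimal sketch using Python's standard `concurrent.futures` module. The `partition` and `process` functions are hypothetical stand-ins: `process` simply sums numbers, where a real pipeline would parse, validate, or load each partition.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, n_parts):
    """Split records into n_parts roughly equal slices."""
    return [records[i::n_parts] for i in range(n_parts)]


def process(part):
    """Stand-in for per-partition work such as parsing and loading."""
    return sum(part)


records = list(range(1, 101))
parts = partition(records, n_parts=4)

# Each partition is handled by its own worker; results come back in order.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(process, parts))

total = sum(partial_sums)
```

Threads suit I/O-bound work such as network reads and database writes. For CPU-heavy transformations in Python, `ProcessPoolExecutor` from the same module is usually the better fit, and distributed engines apply the same partition-and-combine pattern across machines.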

Data Transformation Capabilities

It is important to evaluate the tool’s data transformation features, including data cleaning, enrichment, aggregation, and normalization capabilities. Businesses should check whether the tool can perform these transformations before loading the data into their storage or processing systems, so that data quality and consistency are maintained.
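The sketch below shows what cleaning, normalization, and aggregation might look like on a tiny, made-up dataset. The field names (`country`, `amount`) and the rules applied (drop rows with a missing amount, trim and uppercase country codes) are assumptions chosen for illustration only.

```python
from collections import defaultdict

# Hypothetical raw records with inconsistent formatting and a missing value.
raw = [
    {"country": " us ", "amount": "10.50"},
    {"country": "US", "amount": "4.50"},
    {"country": "de", "amount": None},
    {"country": "DE", "amount": "20"},
]


def clean(rows):
    """Drop rows that are missing an amount."""
    return [r for r in rows if r["amount"] is not None]


def normalize(rows):
    """Trim and uppercase country codes; convert amounts to floats."""
    return [
        {"country": r["country"].strip().upper(), "amount": float(r["amount"])}
        for r in rows
    ]


def aggregate(rows):
    """Sum amounts per country."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["country"]] += r["amount"]
    return dict(totals)


totals = aggregate(normalize(clean(raw)))
```

Running the steps in this order matters: aggregating before normalizing would treat `" us "` and `"US"` as different countries.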

Ease of Use and Deployment

Businesses should opt for a tool that offers a user-friendly interface and intuitive workflows to minimize the learning curve for their team members. Additionally, they need to choose a tool with flexible deployment options, such as cloud-based, on-premises, or hybrid deployments, to suit their business requirements and preferences.

Integration and Interoperability

The right data ingestion tool seamlessly integrates with existing data infrastructure and tools. Businesses should look for pre-built connectors and APIs that facilitate integration with databases, data warehouses, BI tools, and other systems in their data ecosystem. This practice enables smooth data flows and leverages existing investments effectively.

Cost and ROI

Businesses should evaluate the data ingestion tool’s total cost of ownership (TCO), including licensing fees, implementation costs, and ongoing maintenance expenses. They need to consider the tool’s pricing model and calculate the potential return on investment (ROI) based on improved efficiency, faster time to insights, and better decision-making enabled by the tool.
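A back-of-the-envelope version of this calculation is sketched below. All figures are invented for illustration, and the model is deliberately simple: it assumes licensing and maintenance are billed annually, implementation is a one-time cost, and ROI is net benefit divided by total cost.

```python
def total_cost_of_ownership(annual_license, implementation, annual_maintenance, years):
    """TCO = recurring license and maintenance over the period + one-time setup."""
    return annual_license * years + implementation + annual_maintenance * years


def roi(total_benefit, total_cost):
    """ROI as a fraction: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


# Hypothetical three-year scenario.
tco = total_cost_of_ownership(
    annual_license=20_000,
    implementation=15_000,
    annual_maintenance=5_000,
    years=3,
)
three_year_roi = roi(total_benefit=135_000, total_cost=tco)
```

With these made-up numbers, the three-year TCO is 90,000 and the ROI is 0.5, or 50%. The hard part in practice is estimating the benefit figure, since gains such as faster time to insights are difficult to price.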

Concluding Thoughts

Data ingestion tools play an essential role in data integration tasks by streamlining the transfer of large datasets. They help you set up a strong ingestion pipeline for managing data, saving time and effort. Utilizing a top data ingestion tool is a fundamental step in the data analytics process. These tools also enable you to monitor and improve data quality, maintaining compliance with privacy and security standards.

If you are seeking a comprehensive data ingestion tool, Astera is the right choice. Astera’s no-code, modern data integration solution can simplify and automate the process of ingesting data from multiple sources.

Schedule a demo or download a free trial of Astera to experience effortless data ingestion. Simplify your data management today to drive better business outcomes.

Start Streamlining Your Data Management Today

Schedule a demo with Astera today and see for yourself how straightforward and efficient data ingestion can be. If you're ready to experience the benefits first-hand, try Astera for free and start transforming your data workflow without any delays.
