Data Virtualization: Architecture, Tools, & Features Explained

Because their operations are widespread, enterprises rely on many types of systems to manage heterogeneous data. These systems are connected through an intricate data infrastructure comprising databases, data warehouses, data marts, and data lakes, each storing key pieces of business information. However, moving data and extracting business insights requires a myriad of data management technologies, which can be complex to learn and manage. This is where data virtualization tools come into play.

Let’s explore data virtualization technology and how it allows businesses to get the most out of their data infrastructure.

What is Data Virtualization?

A data virtualization system creates an abstraction layer that brings in data from different sources without running a full Extract-Transform-Load (ETL) process or building a separate, integrated platform for viewing data. Instead, it connects virtually to different databases, integrates the information into virtual views, and publishes them as a data service, for example over REST. This enhances data accessibility, making specific pieces of information readily available for reporting, analysis, and decision making.
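The idea of a virtual view published as a service can be sketched in a few lines. This is a minimal illustration, not how any particular product works: SQLite's `ATTACH DATABASE` stands in for connections to two separate source systems, and the schema names `crm` and `billing`, the tables, and the function `customer_totals_service` are all assumptions made up for the example.

```python
import json
import sqlite3

# One connection plays the role of the virtualization layer.
# The two attached in-memory databases are stand-ins for separate source systems.
layer = sqlite3.connect(":memory:")
layer.execute("ATTACH DATABASE ':memory:' AS crm")
layer.execute("ATTACH DATABASE ':memory:' AS billing")

layer.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
layer.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
layer.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(1, "Acme"), (2, "Globex")],
)
layer.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)",
    [(1, 120.0), (1, 80.0), (2, 50.0)],
)

# The virtual view stores only a query definition; no rows are copied.
layer.execute("""
    CREATE TEMP VIEW customer_totals AS
    SELECT c.name AS customer, SUM(i.amount) AS total_billed
    FROM crm.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.id, c.name
""")


def customer_totals_service():
    """Return the view as a JSON string, as the body of a REST response might look."""
    rows = layer.execute(
        "SELECT customer, total_billed FROM customer_totals ORDER BY customer"
    ).fetchall()
    return json.dumps([{"customer": c, "total_billed": t} for c, t in rows])


print(customer_totals_service())
```

A consumer of `customer_totals_service` sees one dataset and never learns that the rows came from two different stores.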

By creating an abstraction layer, data virtualization tools expose only the required data to users without requiring technical details about the location or structure of the data source. As a result, organizations are able to restrict data access to authorized users only to ensure security and meet data governance requirements.
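As a rough sketch of how an abstraction layer can expose only the required data, the snippet below filters columns by role before anything reaches the user. The roles, column names, and the `read_view` function are hypothetical; real tools typically enforce this through their own policy and governance features.

```python
# Hypothetical source rows; in practice these would come from a live source system.
EMPLOYEES = [
    {"id": 1, "name": "Ana", "department": "Sales", "salary": 70000},
    {"id": 2, "name": "Ben", "department": "Support", "salary": 55000},
]

# Columns each role may see. Role names and columns are illustrative only.
VIEW_COLUMNS = {
    "analyst": ("id", "department"),
    "hr": ("id", "name", "department", "salary"),
}


def read_view(role):
    """Return only the columns the given role is allowed to see."""
    if role not in VIEW_COLUMNS:
        raise PermissionError(f"role {role!r} has no access to this view")
    allowed = VIEW_COLUMNS[role]
    return [{col: row[col] for col in allowed} for row in EMPLOYEES]


print(read_view("analyst"))
```

The caller only names a role and a view; where the rows live and how they are stored stays hidden behind `read_view`.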

Data virtualization technology simplifies key processes, such as data integration, federation, and transformation, making data accessible for dashboards, portals, applications, and other front-end solutions. Moreover, by compressing or deduplicating data across storage systems, businesses can meet their infrastructure needs more efficiently, resulting in substantial cost savings.

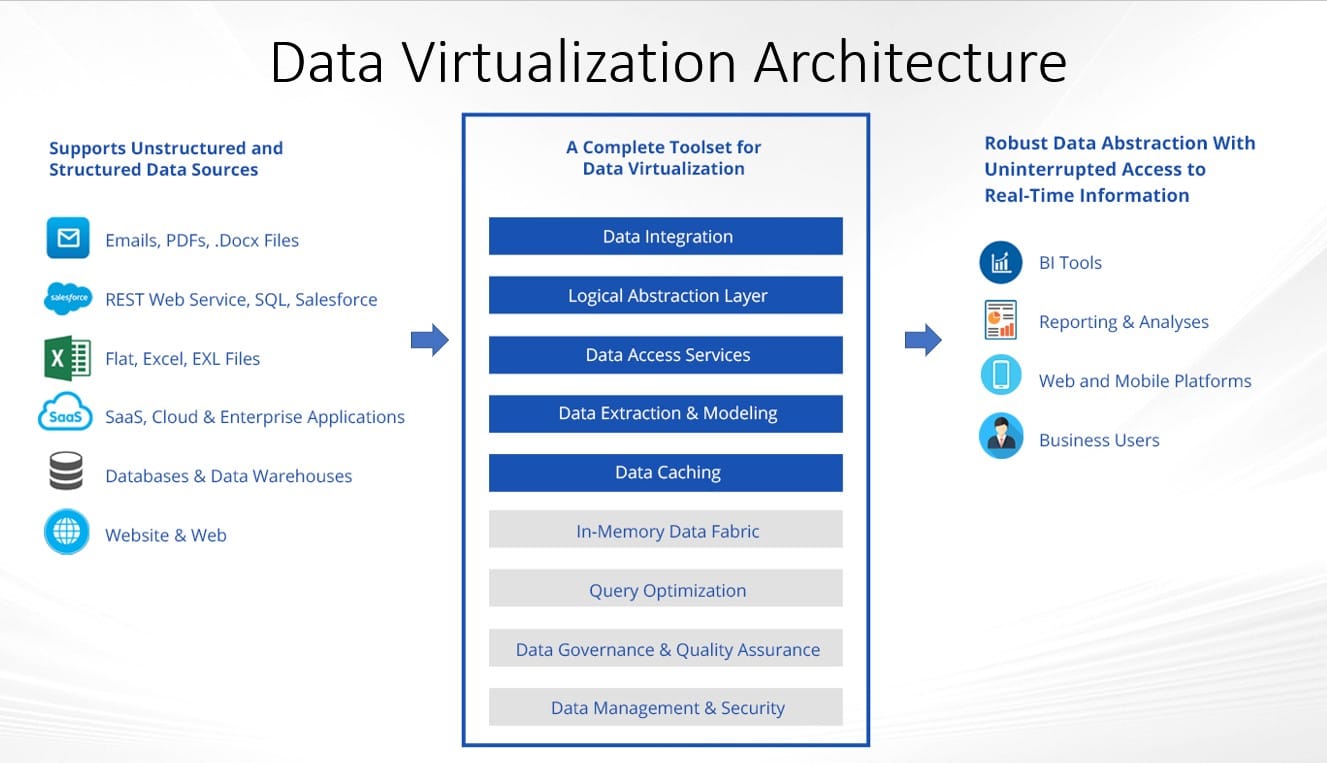

Data Virtualization Architecture

Data virtualization is used to deal with large volumes of data from diverse sources, including traditional and modern databases, data lakes, cloud sources, and other enterprise data repositories. The premise of a data virtualization architecture is that integrating data sources through a logical layer can be far more effective than collecting raw data in a single data lake.

Data Virtualization Architecture Explained

Instead of physically moving data onto a single platform through mechanisms such as an Enterprise Service Bus (ESB) or Extract-Transform-Load (ETL), data virtualization integrates data from various sources where it resides, making it a powerful data platform. When utilized properly, a data virtualization tool can serve as an integral part of the data integration strategy. It can provide greater flexibility in data access, limit data silos, and automate query execution for faster time-to-insight.

What is a Data Virtualization Layer? How Does it Work?

An important component of a data virtualization architecture is the data virtualization layer.

A data virtualization layer is a logical data layer that integrates enterprise data available across disparate data sources. It consolidates that data into a single, centralized access point by presenting a virtual image of it rather than a physical copy. Users can therefore work with the data in real time for business operations without connecting to the source systems directly, which keeps the source data secure.

Businesses now make data virtualization software an integral part of their approach to data management, as it complements processes like data warehousing, data preparation, data quality management, and data integration.

Data Virtualization vs Data Warehouse

Data virtualization allows users to integrate data from multiple sources and use it to build dashboards and reports that deliver business value. This approach is an alternative to a data warehouse, where data is collected from different sources and a duplicate of it is stored in a new data store. The main advantages of data virtualization over data warehousing are speed and real-time access: a solution takes a fraction of the time to build, and users work with current data.


Data Virtualization vs ETL

Although data virtualization and ETL are two different solutions, they are considered complementary technologies, because an ETL/EDW deployment can be improved by adding data virtualization. The two main differences between data virtualization and ETL are:

- ETL duplicates the data from the source system and saves it in a separate data store. Data virtualization, on the other hand, doesn’t copy the source data and simply delegates the request to the source systems.

- A typical ETL/EDW project requires several months of dedicated planning and data modeling before any data is consolidated in a data warehouse, and once deployed, it is difficult to change. Data virtualization, by contrast, is an agile approach that handles changes in the logical data model easily and facilitates fast development iterations.
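The first difference is easy to see in a small experiment. In the sketch below, two in-memory SQLite databases stand in for an operational source and a warehouse; the `stock` table and the helper names `warehouse_qty` and `virtual_qty` are assumptions made up for illustration.

```python
import sqlite3

# Stand-in for an operational source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
source.execute("INSERT INTO stock VALUES ('A-1', 10)")

# ETL-style: copy the rows into a second store at load time.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
warehouse.executemany(
    "INSERT INTO stock VALUES (?, ?)",
    source.execute("SELECT sku, qty FROM stock").fetchall(),
)


def warehouse_qty(sku):
    """Read from the copied store, which reflects the last load."""
    return warehouse.execute(
        "SELECT qty FROM stock WHERE sku = ?", (sku,)
    ).fetchone()[0]


def virtual_qty(sku):
    """Virtualization-style: delegate the request to the source at read time."""
    return source.execute(
        "SELECT qty FROM stock WHERE sku = ?", (sku,)
    ).fetchone()[0]


# The source changes after the load has finished.
source.execute("UPDATE stock SET qty = 7 WHERE sku = 'A-1'")

print("warehouse copy:", warehouse_qty("A-1"))
print("virtual view:  ", virtual_qty("A-1"))
```

The copied store keeps reporting the quantity from the last load until the next ETL run, while the delegated query reflects the update immediately.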

Data Virtualization Applications for Business

Businesses can use data virtualization technology to optimize their systems and operations in several ways, such as:

- Data Delivery: It enables you to publish datasets (requested by users or generated through a client application) as data services or business data views.

- Data Federation: It works in unison with data federation software to provide integrated views of data sources from disparate databases.

- Data Transformation: It allows users to apply transformation logic on the presentation layer, thus improving the overall quality of data.

- Data Movement and Replication: Data virtualization tools don’t copy or move data from the primary system or storage location, saving users from performing extraction processes and keeping multiple copies of inconsistent, outdated data.

- Virtualized Data Access: It allows you to break down data silos by establishing a logical data access point to disparate sources.

- Abstraction: It creates an abstraction layer that hides away the technical aspects, such as storage technology, system language, APIs, storage structure, and location, of the data.

Since data virtualization software offers a comprehensive set of capabilities, it has proven useful for management, operational, and development purposes.
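The transformation and abstraction capabilities listed above can be illustrated with a small sketch. It assumes two hypothetical sources (`ERP_ORDERS` and `SHOP_ORDERS`) that name and format the same fields differently; the mapping functions and the `orders_view` name are invented for the example.

```python
from datetime import datetime

# Hypothetical raw records from two sources with different field names and formats.
ERP_ORDERS = [{"ORD_NO": "1001", "ORD_DT": "2023-03-01", "AMT_CENTS": 12550}]
SHOP_ORDERS = [{"orderId": 2001, "placed": "01/04/2023", "total": "89.90"}]


def from_erp(rec):
    """Map an ERP-style record to the canonical order shape."""
    return {
        "order_id": int(rec["ORD_NO"]),
        "order_date": datetime.strptime(rec["ORD_DT"], "%Y-%m-%d").date(),
        "amount": rec["AMT_CENTS"] / 100,
    }


def from_shop(rec):
    """Map a web-shop record (day/month/year dates) to the canonical order shape."""
    return {
        "order_id": rec["orderId"],
        "order_date": datetime.strptime(rec["placed"], "%d/%m/%Y").date(),
        "amount": float(rec["total"]),
    }


def orders_view():
    """Apply the mappings at read time; the source records are never modified."""
    unified = [from_erp(r) for r in ERP_ORDERS] + [from_shop(r) for r in SHOP_ORDERS]
    return sorted(unified, key=lambda order: order["order_date"])


for order in orders_view():
    print(order)
```

Consumers see one consistent shape (`order_id`, `order_date`, `amount`), while each source keeps its own field names and formats.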

Data Virtualization Benefits

Gartner predicted that, by 2020, about 35 percent of enterprises would make data virtualization a part of their data integration strategy. Enterprises are increasingly opting for data virtualization tools because they offer the following benefits:

- Multi-mode and multi-source data access, making it easy for business users at different levels to use data as per their requirements

- Enhanced security and data governance for keeping critical data safe from unauthorized users

- Hiding away the complexity of underlying data sources, while presenting the data as if it is from a single database or system

- Information agility, which is integral in business environments, as data is readily available for swift decision making

- Infrastructure-agnostic platform, as it enables data from a variety of databases and systems to be easily integrated, leading to reduced operational costs and data redundancy

- Simplified table structure, which can streamline application development and reduce the need for application maintenance

- Easy integration of new cloud sources with existing IT systems, allowing users to have a complete picture of external and internal information

- Hybrid query optimization, enabling you to streamline queries for scheduled push, on-demand pull, and other types of data requests

- Increased speed-to-market, as it cuts down the time needed to obtain data for improving new or existing products or services to meet consumer demands

Other benefits of data virtualization tools include cost savings due to fewer hardware requirements and lower operational and maintenance costs associated with performing ETL processes for populating and maintaining databases.

In addition, data virtualization tools store metadata information and create reusable data virtual layers, allowing you to experience improved data quality and reduced data latency.

Data Virtualization Examples & Use Cases

According to Forrester, data virtualization software has become a critical asset to any business looking to triumph over growing data challenges. With innovations like query pushdown, query optimization, caching, process automation, data catalog, and others, data virtualization technology is making headway in addressing a variety of multi-source data integration pain points.
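Query pushdown, one of the innovations mentioned above, means sending filters to the source instead of pulling everything and filtering afterwards. The sketch below is a simplified illustration with an in-memory SQLite database standing in for a remote source; the `events` table and both function names are assumptions.

```python
import sqlite3

# Stand-in for a remote source holding 1,000 rows, 100 of them in region "EU".
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, region TEXT)")
source.executemany(
    "INSERT INTO events (region) VALUES (?)",
    [("EU",) if i % 10 == 0 else ("US",) for i in range(1000)],
)


def without_pushdown(region):
    """Fetch every row, then filter inside the virtualization layer."""
    rows = source.execute("SELECT id, region FROM events ORDER BY id").fetchall()
    matching = [row for row in rows if row[1] == region]
    return matching, len(rows)


def with_pushdown(region):
    """Send the filter to the source so only matching rows are transferred."""
    rows = source.execute(
        "SELECT id, region FROM events WHERE region = ? ORDER BY id", (region,)
    ).fetchall()
    return rows, len(rows)


naive_rows, naive_transferred = without_pushdown("EU")
pushed_rows, pushed_transferred = with_pushdown("EU")
print("rows transferred without pushdown:", naive_transferred)
print("rows transferred with pushdown:   ", pushed_transferred)
```

Both functions return the same 100 matching rows, but the first moves all 1,000 rows out of the source to get them. Over a network, that difference in transferred rows is where pushdown saves time.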

Here are a few database virtualization use cases and applications that show how it is helping businesses address master data management challenges:

1. Enhances Logical Data Warehouse Functionality

Data virtualization fuels the logical data warehouse architecture. The technology enables federating queries across traditional and modern enterprise data repositories and software utilities, such as data warehouses, data lakes, web services, Hadoop, and NoSQL stores, making the results appear to users as if they are sourced from a single database or storage location.

In a logical data warehouse architecture, data virtualization allows you to create a single logical place for users to acquire analytical data, irrespective of the application or source. It enables quick data transfer through several commonly used protocols and APIs, such as REST, JDBC, ODBC, and others. It also enables you to assign workloads automatically to ensure compliance with Service Level Agreement (SLA) requirements.

2. Addresses Complexity of Big Data Analytics

Big data virtualization helps businesses utilize predictive, cognitive, real-time, and historical forms of big data analytics to gain an edge over the competition. However, due to the increasing volume and complexity of data, businesses must adopt a wide range of technologies, such as Hadoop systems, data warehouses, real-time analytics platforms, and others, to take advantage of arising opportunities.

Through data federation and abstraction, you can create logical views of data residing in disparate sources, enabling you to use the derived data for advanced analytics faster. Additionally, big data virtualization tools allow easy integration with your data warehouse, business intelligence tools, and other analytics platforms within your enterprise data infrastructure for information agility.

3. Facilitates Application Data Access

Systems and applications require data to produce insights needed for decision-making. However, one major challenge when working with applications is accessing distributed data types and sources. Moreover, you may need to write extended lines of code to facilitate sharing data assets among systems and applications. Some operations may also need complex transformations, which are only achievable through specialized techniques or tools.

Data virtualization removes much of this effort. For example, if you have two datasets residing in IBM DB2 and PostgreSQL, a data virtualization tool will map to both databases, automatically execute a separate query against each to fetch the required data, and federate the results into a single integrated view, exposed through a semantic presentation layer. It will also perform joins, filters, or other transformations on the canonical layer to present the data in the desired format.
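The flow described here (one query per source, then a join in the middle layer) can be sketched as follows. Two independent SQLite connections stand in for IBM DB2 and PostgreSQL so the example runs anywhere; the table layouts and the function `orders_by_customer` are hypothetical and do not reflect any specific product's API.

```python
import sqlite3

# Two independent connections stand in for IBM DB2 and PostgreSQL.
db2_like = sqlite3.connect(":memory:")
db2_like.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
)
db2_like.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Acme", "DE"), (2, "Globex", "US"), (3, "Initech", "DE")],
)

pg_like = sqlite3.connect(":memory:")
pg_like.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
pg_like.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 250.0), (11, 2, 90.0), (12, 1, 40.0), (13, 3, 15.0)],
)


def orders_by_customer(country):
    """Run one query per source, then join and aggregate in the middle layer."""
    # Query 1: the filter on country is sent to the customer source.
    customers = dict(
        db2_like.execute(
            "SELECT id, name FROM customers WHERE country = ?", (country,)
        ).fetchall()
    )
    if not customers:
        return []

    # Query 2: fetch only the orders that belong to those customers.
    placeholders = ",".join("?" * len(customers))
    orders = pg_like.execute(
        f"SELECT customer_id, total FROM orders WHERE customer_id IN ({placeholders})",
        list(customers),
    ).fetchall()

    # Join on customer id and sum order totals per customer name.
    totals = {}
    for customer_id, total in orders:
        name = customers[customer_id]
        totals[name] = totals.get(name, 0.0) + total
    return sorted(totals.items())


print(orders_by_customer("DE"))
```

Neither source knows about the other; the join exists only in the layer that sits between them and the consumer.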

4. Optimizes the Enterprise Data Warehouse (EDW)

Data warehouses play a crucial role in helping enterprises handle massive amounts of incoming data from multiple sources and preparing it for query and analysis. While ETL and other traditional data integration methods are good for bulk data movement, users have to work with data that is only as current as the last ETL operation. Additionally, moving very large volumes of data (petabytes or more) becomes time-intensive and requires more powerful hardware and software.

Data virtualization streamlines the data integration process. It utilizes a federation mechanism to homogenize data from different databases and create a single integrated platform that becomes a single point of access for users. It offers on-demand integration, providing real-time data for reporting and analyses.

Get a Data Warehousing Tool for Your Business

Whether you want to create, design, or deploy an on-premise or cloud data warehouse, Astera DW Builder can do it for you in a code-free environment. Trusted by over 300 customers across 30+ industries, Astera offers a code-free data virtualization solution that integrates, cleanses, and transforms data from varied sources and makes it available for accurate reporting and analysis.
