Data Warehouse Testing: Process, Importance & Challenges

The success of a data warehouse depends on how well an organization implements test cases that guarantee data integrity. As organizations and their data grow, data warehouse testing becomes crucial for adhering to industry best practices.

What Is Data Warehouse Testing?

Data warehouse testing is the process of verifying the integrity, accuracy, and consistency of data stored within a data warehouse. This testing is essential because it ensures that data collected from various sources retains its quality and precision when integrated into the warehouse.

A central part of this work is thoroughly validating the data integration process, which is pivotal for maintaining data quality and accuracy.

Data warehouse testing checks whether the data transferred from different sources to the warehouse is correct, complete, and usable.

Accurate data is the foundation of trustworthy analytics, which businesses and organizations rely on to make strategic decisions. Data warehouse testing enables reliable analytics and informed decision-making by maintaining data quality throughout the analytics process.

Data Warehouse Testing vs. ETL Testing

Data warehouse testing and ETL testing are intertwined but serve different purposes within the data lifecycle. ETL testing is a subset of data warehouse testing, specifically focusing on the Extract, Transform, Load (ETL) stages of data movement.

ETL testing ensures that data extraction from source systems, transformation to fit business needs, and loading into the target data warehouse occur without errors and align with the requirements.

On the other hand, data warehouse testing encompasses a broader scope. It includes ETL testing and extends to validating the data storage, retrieval mechanisms, and overall performance and functionality of the data warehouse. This type of testing verifies that the data warehouse operates as expected and supports the business processes it was designed to facilitate.

In short, ETL testing is concerned with the accuracy and integrity of data as it travels from source to destination, while data warehouse testing covers the end-to-end data warehouse environment, ensuring its readiness for analytics and decision support.

Importance of Data Warehouse Testing

The data warehouse is more than just a data repository; it is a strategic enterprise resource providing valuable insights for data-driven decision-making. It consolidates data from various sources into a comprehensive platform, enabling businesses to gain a holistic view of their operations and make informed decisions.

However, the strategic value of a data warehouse depends on the quality of the data it contains. A study published in Information Systems Frontiers points out that poor data quality often leads to unsatisfactory decisions, which is why testing the data warehouse is crucial.

Data warehouse testing rigorously validates the extraction, transformation, and loading processes, data integrity, and the performance of the data warehouse. It helps teams find and fix errors early, so the data is trustworthy and consistent.

Research shows that testing boosts confidence in the data warehouse, especially regarding data quality. Ultimately, data warehouse testing enables businesses to leverage the full potential of data warehouses, confidently make data-driven decisions, and stay ahead in the market.

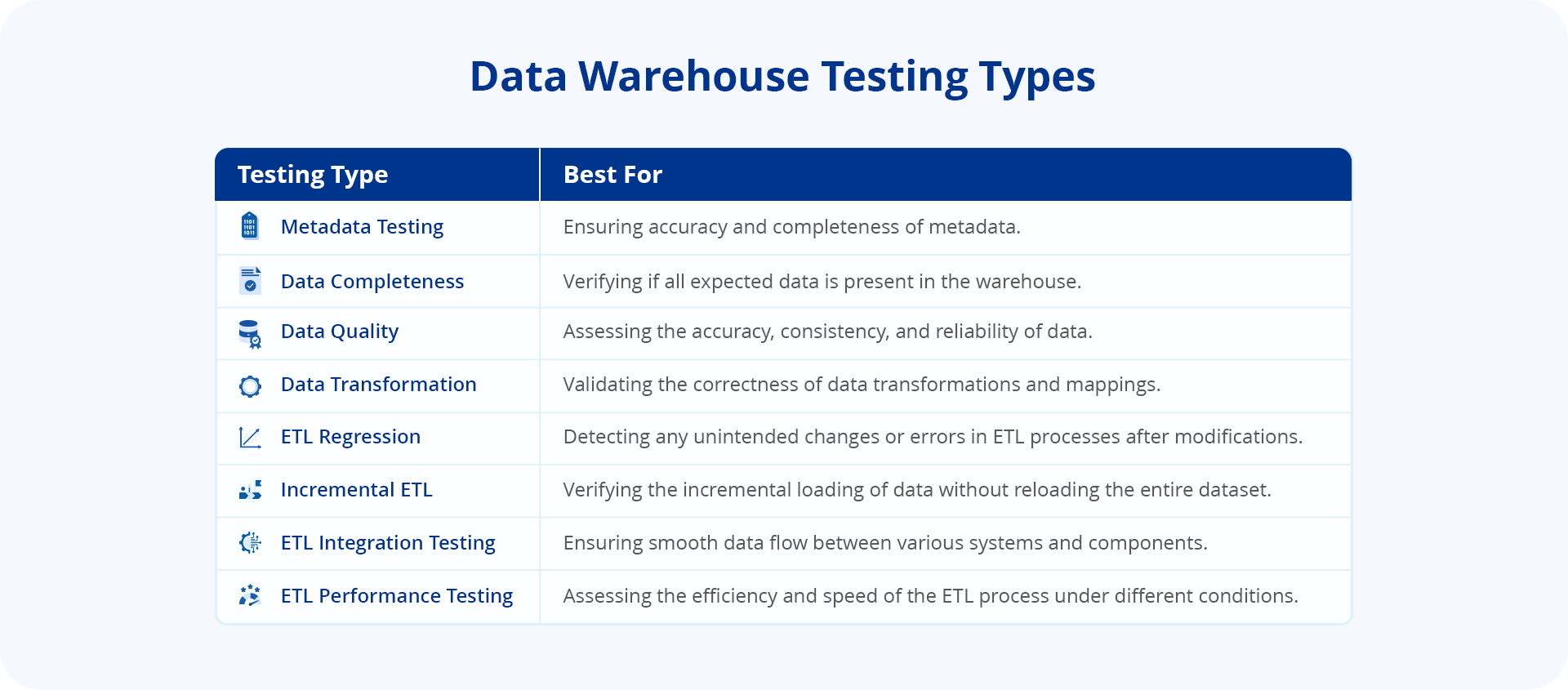

Types of ETL Tests in a Data Warehouse

Implementing robust ETL testing methodologies is essential for upholding data integrity and maximizing the value derived from the data warehouse. This table provides a high-level overview of each test type. In practice, the specifics of each test depend on the requirements of the ETL process and the characteristics of the data being handled.

| Test | Description | Example |
| --- | --- | --- |
| Metadata Testing | Metadata testing confirms that table definitions conform to the data model and application design specifications. It should include data type, data length, and index/constraint checks. | Verifying that the data type of a source column matches that of the corresponding target column, ensuring consistent data types. |
| Data Completeness Testing | Data completeness testing ensures that all expected data is transferred from the source to the target system. Tests include comparing and validating data between source and target, along with row counts and aggregates (avg, sum, min, max). | Checking that all source table records were transferred to the target table without omissions or duplicates. |
| Data Quality Testing | Data quality tests validate the accuracy of the data. Data profiling identifies data quality issues, and the ETL is designed to fix or handle them. Automating data quality checks between the source and target systems can prevent problems after implementation. | Identifying and correcting misspellings in customer names during the ETL process so that names are consistent and accurate in the target database. |
| Data Transformation Testing | Data transformation testing comes in two flavors: white-box and black-box. White-box testing examines the program structure and derives test data from the program logic/code; testers build test cases from the ETL code and mapping design documents, which also help them review the transformation logic. Black-box testing examines application functionality without looking at internal structures. | White-box: reviewing the ETL code to confirm that transformation rules are implemented according to the mapping design document. Black-box: verifying the functionality of the transformation process without considering its internal logic. |
| ETL Regression Testing | Regression testing validates that the ETL process produces the same output for a given input before and after each change. | Running regression tests after modifying the ETL code to confirm that the output stays consistent with previous versions. |
| Incremental ETL Testing | Incremental ETL testing confirms that source updates are loaded accurately into the target system. | Checking that new records added to the source database are captured and loaded correctly into the target data warehouse during an incremental ETL run. |
| ETL Integration Testing | ETL integration testing is end-to-end testing of the data in the ETL process and the target application. | Testing the entire ETL workflow, including extraction, transformation, and loading, to ensure seamless integration with the target application. |
| ETL Performance Testing | ETL performance testing is end-to-end verification of the system’s ability to handle large and/or unexpected volumes of data. | Simulating large data volumes and measuring the time taken by the extraction, transformation, and loading operations. |
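To make the completeness row concrete, here is a minimal Python sketch that reconciles a source table with its warehouse copy. It assumes both tables are reachable through one DB-API connection; an in-memory SQLite database stands in for the real systems, and the table and column names (`src_orders`, `dw_orders`, `order_id`, `amount`) are hypothetical.

```python
import sqlite3


def completeness_report(conn, source, target, key, measure):
    """Compare row counts, aggregates, and key coverage of two tables.

    Table and column names are interpolated into SQL, so they must come from
    trusted test configuration, never from user input.
    """
    report = {}
    for label, table in (("source", source), ("target", target)):
        count, total, low, high = conn.execute(
            f"SELECT COUNT(*), SUM({measure}), MIN({measure}), MAX({measure}) "
            f"FROM {table}"
        ).fetchone()
        report[label] = {"count": count, "sum": total, "min": low, "max": high}

    # Keys present in the source but absent from the target (omissions).
    report["missing_keys"] = [
        row[0]
        for row in conn.execute(
            f"SELECT {key} FROM {source} EXCEPT SELECT {key} FROM {target}"
        )
    ]
    # Keys loaded more than once into the target (duplicates).
    report["duplicate_keys"] = [
        row[0]
        for row in conn.execute(
            f"SELECT {key} FROM {target} GROUP BY {key} HAVING COUNT(*) > 1"
        )
    ]
    report["passed"] = (
        report["source"] == report["target"]
        and not report["missing_keys"]
        and not report["duplicate_keys"]
    )
    return report


# Hypothetical tables: order 3 was dropped and order 2 was loaded twice.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE src_orders (order_id INTEGER, amount REAL);
    CREATE TABLE dw_orders (order_id INTEGER, amount REAL);
    INSERT INTO src_orders VALUES (1, 10.0), (2, 25.5), (3, 7.25);
    INSERT INTO dw_orders VALUES (1, 10.0), (2, 25.5), (2, 25.5);
    """
)
report = completeness_report(conn, "src_orders", "dw_orders", "order_id", "amount")
print(report["source"]["count"], report["target"]["count"])  # 3 3
print(report["missing_keys"], report["duplicate_keys"], report["passed"])  # [3] [2] False
```

The row counts agree (3 and 3) even though one order is missing and another is duplicated, which is why the sketch also compares aggregates and keys. Exact equality on `SUM` over floating-point columns can be brittle in a real warehouse; comparing with a tolerance, or on DECIMAL columns, is a reasonable adjustment.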

How to Test a Data Warehouse: The Process

Testing is a critical part of a data warehouse’s development lifecycle, ensuring data integrity, performance, and reliability. The following steps can help organizations establish a thorough and effective data warehouse testing process and, in turn, a reliable platform for data-driven decision-making.

To illustrate, consider a bank that has recently implemented a data warehouse to manage its vast transactional data, customer information, and financial product details.

Identifying Entry Points

Start data warehouse testing by pinpointing the data entry points: data sources, ETL processes, and end-user access points. Understanding these entry points helps in creating targeted test cases.

The bank can identify multiple data entry points:

- Data Sources: Customer relationship management (CRM) systems, loan processing applications, and investment tracking platforms.

- ETL Processes: Real-time data streaming and batch processing jobs that handle data extraction, transformation, and loading.

- End-User Access Points: Online banking portals, mobile apps, and internal analytics dashboards.

Preparing Collaterals

The next step is gathering all necessary test collaterals, such as data models, ETL specifications, and business requirements. These documents serve as a blueprint for the testing process.

The bank will need to gather the following collaterals:

- Data Models: Complex models representing customer demographics, financial products, and transactional relationships.

- ETL Specifications: Detailed rules and mappings that govern how data is processed and integrated into the warehouse.

- Business Requirements: Critical reports and analytics that the business stakeholders need to drive decision-making.
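ETL specifications can drive tests directly. The sketch below assumes a hypothetical `MAPPING_SPEC` excerpt and uses SQLite's `PRAGMA table_info` as a stand-in for a warehouse catalog. It flags target columns whose declared types differ from the specification or that are missing entirely, which is a metadata test in the terms of the ETL test table above.

```python
import sqlite3

# Hypothetical excerpt of an ETL specification: each target column and the
# declared type the data model calls for.
MAPPING_SPEC = {
    "dim_customer": {
        "customer_id": "INTEGER",
        "full_name": "VARCHAR(100)",
        "segment": "VARCHAR(20)",
    }
}


def metadata_mismatches(conn, spec):
    """Return (table, column, expected, actual) tuples where the deployed
    schema differs from the specification; actual is None if the column is missing."""
    problems = []
    for table, columns in spec.items():
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        actual = {
            row[1]: row[2].upper()
            for row in conn.execute(f"PRAGMA table_info({table})")
        }
        for column, expected in columns.items():
            found = actual.get(column)
            if found != expected.upper():
                problems.append((table, column, expected, found))
    return problems


# A deployed table whose full_name is too short and whose segment column is missing.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dim_customer (customer_id INTEGER, full_name VARCHAR(50))")
for problem in metadata_mismatches(conn, MAPPING_SPEC):
    print(problem)
```

On most warehouse platforms the same comparison would query `INFORMATION_SCHEMA.COLUMNS` instead; SQLite is used here only because it ships with Python. SQLite records declared types such as `VARCHAR(50)` but does not enforce lengths, so this checks the schema definition, not the stored data.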

Designing a Testing Framework

Next, organizations must develop a robust testing framework that aligns with the data warehouse architecture. This framework should cover unit testing, system testing, integration testing, and user acceptance testing (UAT).

The bank’s testing framework should include:

- Unit Testing: Individual tests for each component within the ETL pipeline.

- System Testing: Holistic testing of the data warehouse’s ability to handle the entire data lifecycle.

- Integration Testing: Ensuring the data warehouse integrates seamlessly with other business systems.

- User Acceptance Testing (UAT): Validation by business users that the warehouse meets their reporting needs.
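At the unit-testing level, each ETL component can be exercised in isolation. Here is a minimal sketch using Python's built-in `unittest`; `standardize_name` is a hypothetical transformation (trim, collapse whitespace, title-case), not a rule taken from any particular pipeline.

```python
import unittest


def standardize_name(raw):
    """Hypothetical ETL transformation: trim, collapse internal whitespace,
    and title-case a customer name. Blank or missing input becomes None."""
    if raw is None:
        return None
    cleaned = " ".join(raw.split())
    return cleaned.title() if cleaned else None


class StandardizeNameTest(unittest.TestCase):
    def test_trims_and_collapses_whitespace(self):
        self.assertEqual(standardize_name("  jane   doe "), "Jane Doe")

    def test_blank_input_becomes_null(self):
        self.assertIsNone(standardize_name("   "))
        self.assertIsNone(standardize_name(None))

    def test_clean_value_is_unchanged(self):
        self.assertEqual(standardize_name("Ana Silva"), "Ana Silva")


if __name__ == "__main__":
    # A fixed argv ignores unrelated command-line flags; exit=False keeps
    # the interpreter running after the test report is printed.
    unittest.main(argv=["etl-unit-tests"], exit=False)
```

Run the file directly, or pass it to `python -m unittest`. Keeping transformations as small pure functions makes them easy to unit-test. Note that `str.title()` turns "McDonald" into "Mcdonald", exactly the kind of edge case a unit test should pin down before a rule ships.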

Adopting a Comprehensive Testing Approach

Implement a comprehensive testing strategy that includes:

- Data Validation: Ensure the data loaded into the warehouse is accurate, complete, and consistent. For instance, the bank can check the accuracy and completeness of loaded data against its source systems.

- Transformation Logic Verification: Test cases are created to verify each business rule applied during the ETL process. The bank can ensure that all business logic, such as interest calculations and risk assessments, is applied correctly.

- Performance Testing: Load testing is conducted to assess the system’s response under heavy data loads. The bank can evaluate the system’s performance under peak load conditions and optimize query response times.

- Security Testing: Role-based access controls are tested to ensure users have appropriate permissions. The bank must verify that data security and user access controls function as intended. It should also confirm that sensitive financial data is securely stored and accessed.
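Transformation logic verification usually means re-deriving a business rule independently and comparing the result with what the ETL loaded. The sketch below assumes a hypothetical monthly-interest rule (balance × annual rate ÷ 12, rounded half-up to cents) and hypothetical column names; the bank's actual rules would come from its ETL specifications.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def expected_monthly_interest(balance, annual_rate):
    """Hypothetical business rule, re-implemented independently of the ETL:
    monthly interest = balance * annual_rate / 12, rounded half-up to cents."""
    raw = Decimal(balance) * Decimal(annual_rate) / 12
    return raw.quantize(CENT, rounding=ROUND_HALF_UP)


def verify_interest(rows):
    """Return the account IDs whose loaded interest disagrees with the rule."""
    return [
        row["account_id"]
        for row in rows
        if Decimal(row["monthly_interest"])
        != expected_monthly_interest(row["balance"], row["annual_rate"])
    ]


# Hypothetical warehouse rows; amounts are strings so no float error creeps in.
loaded = [
    {"account_id": "A-100", "balance": "1200.00", "annual_rate": "0.05", "monthly_interest": "5.00"},
    {"account_id": "A-101", "balance": "999.99", "annual_rate": "0.035", "monthly_interest": "2.91"},
]
print(verify_interest(loaded))  # ['A-101']
```

`Decimal` is used instead of `float` so the independent calculation does not introduce its own rounding noise. A-101 is flagged because 999.99 × 0.035 ÷ 12 = 2.9166375, which rounds to 2.92, not the loaded 2.91.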

Ongoing Testing

Testing does not end when the warehouse goes live; it should continue throughout the data warehouse lifecycle. The bank can commit to ongoing testing to:

- Catch Issues Early: Regular testing in the development phase to identify and fix issues promptly.

- Adapt to Changes: Continuous testing to accommodate changes in financial regulations and market conditions. For instance, automated regression testing can help ensure that new data sources or business rules don’t introduce errors.

- Maintain Quality and Performance: Scheduled testing to ensure the data warehouse’s integrity and efficiency remain high. The bank can perform periodic audits to maintain data quality and performance.
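Automated regression testing can be as simple as running the old and new ETL code on the same input and comparing their outputs. This sketch assumes each output row is a JSON-serializable dict. It computes an order-independent SHA-256 fingerprint for a quick pass/fail and lists rows that appear in only one of the two outputs. All names are illustrative.

```python
import hashlib
import json


def _canonical(row):
    # Serialize with sorted keys so equal dicts always give equal strings.
    return json.dumps(row, sort_keys=True)


def output_fingerprint(rows):
    """Order-independent SHA-256 fingerprint of an ETL output."""
    digest = hashlib.sha256()
    for line in sorted(_canonical(row) for row in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def regression_check(baseline_rows, candidate_rows):
    """Compare outputs produced from the same input before and after a change."""
    base = {_canonical(r) for r in baseline_rows}
    cand = {_canonical(r) for r in candidate_rows}
    return {
        "identical": output_fingerprint(baseline_rows) == output_fingerprint(candidate_rows),
        "only_in_baseline": sorted(base - cand),
        "only_in_candidate": sorted(cand - base),
    }


# Hypothetical outputs: the modified ETL code changed the total for id 1.
baseline = [{"id": 1, "total": 10}, {"id": 2, "total": 20}]
candidate = [{"id": 2, "total": 20}, {"id": 1, "total": 11}]
result = regression_check(baseline, candidate)
print(result["identical"])  # False
print(result["only_in_baseline"], result["only_in_candidate"])
```

The set differences ignore duplicate rows, but the fingerprint does not, so a row that is loaded twice still makes `identical` false.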

Challenges in Data Warehouse Testing

Testing a data warehouse is a complex task with many challenges. Common obstacles include data heterogeneity, high volumes, scalability, and data mapping, and each matters for its own reason:

- Data Heterogeneity: With data coming from various sources in different formats, ensuring consistency and accuracy is crucial. Inconsistent data can lead to flawed analytics and business intelligence outcomes.

- High Volumes: The sheer volume of data in a warehouse can be overwhelming, making it challenging to perform comprehensive testing within reasonable timeframes.

- Scalability: As businesses grow, so does their data. A data warehouse must be scalable to handle increasing loads, which adds complexity to the testing process.

- Data Mapping: Accurate mapping of data from source to destination is vital. Errors in data mapping can result in significant discrepancies, affecting decision-making processes.
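Data heterogeneity and data mapping often meet in something as mundane as date formats. This minimal sketch assumes each source system uses one documented date format (the `SOURCE_DATE_FORMATS` mapping and the source names are hypothetical). It normalizes every date to ISO 8601 and routes records that do not match the mapping to a reject list instead of loading them.

```python
from datetime import datetime

# Hypothetical per-source date formats, as a source-to-target mapping
# document might specify them.
SOURCE_DATE_FORMATS = {
    "crm": "%Y-%m-%d",
    "loans": "%m/%d/%Y",
    "investments": "%d.%m.%Y",
}


def normalize_dates(records):
    """Convert each record's 'opened' date to ISO 8601 using its source's format.

    Returns (normalized, rejects). A record is rejected when its source has no
    mapped format or its date does not match that format.
    """
    normalized, rejects = [], []
    for record in records:
        fmt = SOURCE_DATE_FORMATS.get(record["source"])
        if fmt is None:
            rejects.append((record, f"no date format mapped for {record['source']!r}"))
            continue
        try:
            parsed = datetime.strptime(record["opened"], fmt).date()
        except ValueError as exc:
            rejects.append((record, str(exc)))
            continue
        normalized.append({**record, "opened": parsed.isoformat()})
    return normalized, rejects


records = [
    {"source": "crm", "id": 1, "opened": "2024-03-01"},
    {"source": "loans", "id": 2, "opened": "03/01/2024"},
    {"source": "investments", "id": 3, "opened": "01.03.2024"},
    {"source": "loans", "id": 4, "opened": "2024-03-01"},  # wrong format for this source
    {"source": "legacy", "id": 5, "opened": "2024-03-01"},  # unmapped source
]
ok, bad = normalize_dates(records)
print([r["opened"] for r in ok])  # ['2024-03-01', '2024-03-01', '2024-03-01']
print([r["id"] for r, _ in bad])  # [4, 5]
```

Record 4 carries an ISO date where the loan system's mapping expects `MM/DD/YYYY`, and record 5 comes from an unmapped source; both end up in `bad`, which a test can assert is empty before the load proceeds.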

End-to-end data flow testing adds further complexity, because testers must verify the entire process, from data extraction at the source to the data’s final form in the warehouse. This includes testing the ETL processes, data transformations, and loading mechanisms. The complexity arises from the need to validate the integrity and accuracy of data at each stage, which often requires sophisticated testing strategies and tools.
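One simple way to validate each stage is to reconcile row counts between stages. The sketch assumes a one-row-in, one-row-out pipeline (no aggregation or row splitting) and hypothetical per-run metrics named `extracted`, `rejected`, `transformed`, and `loaded`; pipelines that aggregate would need stage-specific rules instead.

```python
def reconcile_stages(counts):
    """Check that rows are conserved between pipeline stages.

    Assumes every extracted row is either transformed or rejected, and every
    transformed row is loaded. `counts` is a hypothetical dict of run metrics.
    """
    issues = []
    accounted = counts["transformed"] + counts["rejected"]
    if counts["extracted"] != accounted:
        issues.append(
            f"transform: {counts['extracted']} extracted but "
            f"{accounted} transformed or rejected"
        )
    if counts["transformed"] != counts["loaded"]:
        issues.append(
            f"load: {counts['transformed']} transformed but {counts['loaded']} loaded"
        )
    return issues


# Hypothetical metrics from one nightly run: 13 rows vanished during loading.
run = {"extracted": 10_000, "rejected": 12, "transformed": 9_988, "loaded": 9_975}
for issue in reconcile_stages(run):
    print(issue)  # load: 9988 transformed but 9975 loaded
```

Counting rejects explicitly matters: without that bucket, legitimately filtered rows and silently lost rows look the same.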

The Role of Automated Data Integration Tools

Automated data integration tools can significantly alleviate these challenges. These tools handle data heterogeneity by transforming disparate data into a unified format. They can manage high volumes efficiently, often in real time, keeping the data warehouse up to date.

Scalability is built into these tools, allowing them to adjust to varying data loads with minimal manual intervention. Moreover, automated tools provide reliable data mapping capabilities, reducing the risk of human error and ensuring that data is accurately transferred from source to destination.

These tools streamline the testing process with advanced features such as data profiling, quality checks, and automated data validation. They offer a more efficient and accurate approach to data warehouse testing, enabling organizations to maintain the high-quality data repositories essential for informed decision-making.
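Data profiling, one of the features mentioned above, comes down to computing summary statistics for each column. Here is a minimal, tool-agnostic sketch in plain Python; it treats blank strings as nulls, which is an assumption rather than a universal rule, and expects a column's non-null values to be mutually comparable.

```python
def profile_column(values):
    """Summarize one column: row count, nulls, null rate, distinct values, min, max.

    Blank strings are counted as nulls (an assumption; adjust to local rules).
    Non-null values must be mutually comparable for min/max to work.
    """
    present = [v for v in values if v is not None and v != ""]
    rows = len(values)
    nulls = rows - len(present)
    return {
        "rows": rows,
        "nulls": nulls,
        "null_rate": round(nulls / rows, 3) if rows else 0.0,
        "distinct": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


# Hypothetical "state" column from a customer extract.
profile = profile_column(["NY", "CA", None, "", "NY", "TX"])
print(profile)
# {'rows': 6, 'nulls': 2, 'null_rate': 0.333, 'distinct': 3, 'min': 'CA', 'max': 'TX'}
```

A quality check can then assert thresholds on these numbers, for example failing a load when `null_rate` for a mandatory column exceeds an agreed limit.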

According to a study published in the International Journal of Recent Technology and Engineering, automated data warehouse testing can save 75% to 89% of the time spent on testing.

How Astera Streamlines End-to-End Data Warehouse Testing

Overcoming the challenges in data warehouse testing is not just about ensuring the system works; it’s about guaranteeing the reliability of data-driven insights that businesses rely on. Automated data integration tools like Astera play a pivotal role in achieving this goal, providing a robust solution to the complexities of data warehouse testing.

Astera is an end-to-end data management platform that helps organizations implement an end-to-end testing process, making it more efficient and effective. Here are some key features that Astera offers:

- Unified Metadata-Driven Solution: Provides a no-code solution that allows for the design, development, and deployment of high-volume data warehouses with ease.

- Dimensional Modeling and Data Vault 2.0 Support: Supports advanced data warehousing concepts, enabling businesses to build scalable and flexible data storage solutions.

- Automated Data Quality Checks: Profile, cleanse, and validate data to ensure it is ready for the data warehouse using built-in data quality modules.

- No-Code Development Environment: A point-and-click interface allows users to create and edit entity relationships without writing a single line of code.

- Data Model Deployment: Easily deploy or publish the data model to the server for data consumption.

- Job Scheduling and Monitoring: Robust job scheduling and monitoring features automate the data warehousing process, ensuring that your data is always up-to-date and accurate.

Leveraging these features, Astera significantly reduces the time and effort required to build and maintain a data warehouse. It’s an ideal solution for businesses integrating disparate data sources into a single source of truth and maintaining an auditable, time-variant data repository.

Ready to transform your data warehousing projects? Start a 14-day free trial of Astera today and experience the power of automated, no-code data warehousing.

Astera AI Agent Builder - First Look Coming Soon!