ETL Testing: Processes, Types, and Best Practices

ETL testing is a set of procedures used to evaluate and validate the data integration process in a data warehouse environment. In other words, it’s a way to verify that the data from your source systems is extracted, transformed, and loaded into the target storage as required by your business rules.

ETL (Extract, Transform, Load) is how data integration tools and BI platforms primarily turn data into actionable insights. During an ETL process, ETL tools extract data from a designated source, transform it into a structure and format supported by the destination system, and load it into a centralized storage location, typically a cloud data warehouse or data lake.

ETL testing comprises different tests conducted at different stages of the ETL process. These tests validate and verify the data to ensure accuracy and minimize data loss. This blog offers an in-depth discussion on ETL testing and its types, its necessity, the steps it entails, and how to do it right.

What is ETL Testing?

Data now heavily impacts businesses at all levels, from everyday operations to strategic decisions. This growing role has driven the global business intelligence (BI) and analytics tools market to an estimated value of nearly $17 billion.

The data contained in a data warehouse is often your enterprise’s only source for generating insights and creating actionable strategies. Therefore, it must only contain accurate, reliable, and up-to-date data if it’s to serve as an effective single source of truth for your business.

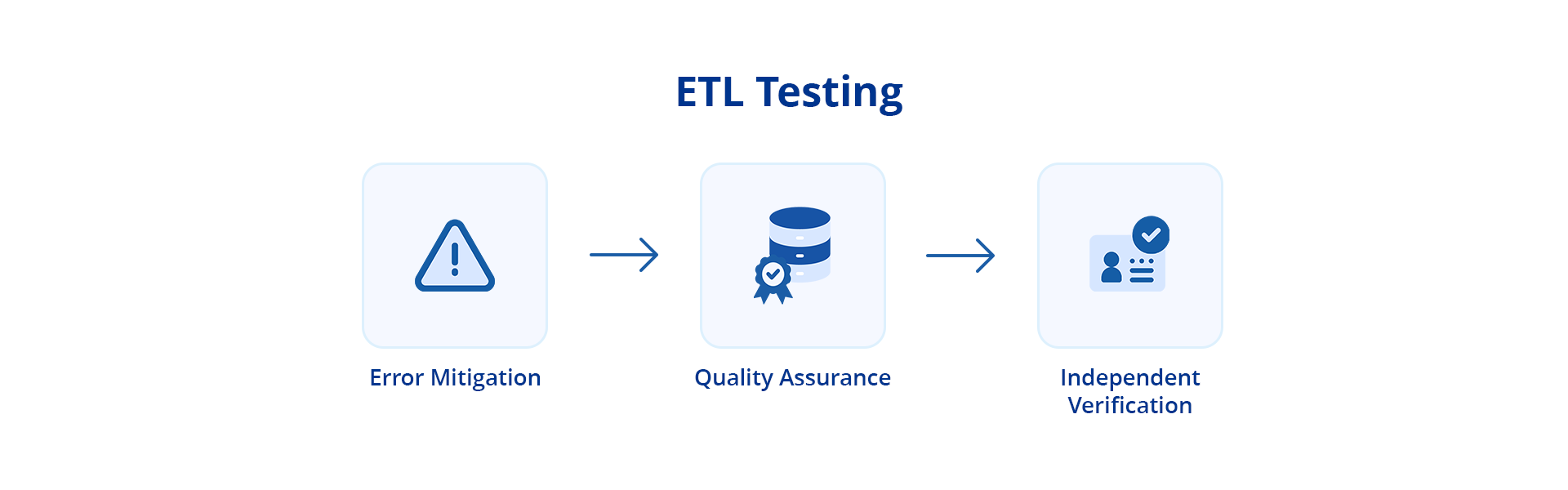

Automation significantly minimizes the likelihood of errors during ETL, which are otherwise prevalent in a manual ETL pipeline. However, automation must be supplemented with additional validation measures — and this is where ETL testing is useful for the following reasons:

- It mitigates errors left undetected by automation.

- It ensures the centralized data’s health and quality.

- It acts as an independent layer of verification, offering further assurance that the automated ETL process is functioning correctly and producing the expected results.

Database Testing vs ETL Testing

Database testing is a data validation procedure similar to ETL testing, but the similarities end there. Here are some differences between the two:

| ETL Testing | Database Testing |
| --- | --- |
| Typically performed on data in a data warehouse. | Generally performed on transactional systems. |
| Primary purpose is to ensure that the data being tested is moving as it’s supposed to. | Aims to ensure that all data follows the data model’s predefined rules. |
| Verifies that source counts match the counts in the destination. | Verifies the absence of orphan records. |
| Checks for duplications in the loaded data. | Checks for redundant tables, missing data in columns, and database normalization. |

Why We Need ETL Testing

You can consider skipping ETL testing if you have a simple data flow with minimal transformations, and your source and target systems are stable and reliable.

However, assessing your data processes’ complexity should be a standard practice before forgoing testing — this can help you avoid data inconsistencies and errors downstream.

ETL testing proves valuable in many situations, including:

- After data integration or migration projects are completed.

- When loading data into a newly configured data warehouse for the first time.

- When adding a new data source to an existing data warehouse.

- During data movement and transformation.

- When there are suspected errors in ETL processes preventing them from running normally.

- When there’s a lack of confidence in data quality in either a source or the destination.

The ETL Testing Process

Testing protocols are subjective and customizable to an enterprise’s requirements and processes. As such, there isn’t a one-size-fits-all model for ETL testing. However, ETL testing typically comprises the following steps:

1. Understanding Business Requirements

Designing an effective ETL testing process requires understanding your organization’s business requirements. This involves examining its data models, business workflows, reports, sources and destinations, and data pipelines.

This understanding enables ETL testers to know what they’re testing and why.

2. Data Source Identification and Validation

In the next part of the ETL testing process, identify your source data and perform schema checks, table validation, and other initial checks. This is to ensure that the ETL testing process aligns with the requirements identified by studying your business model and workflows.
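An initial schema check of this kind can be sketched in a few lines. The example below is only an illustration: it assumes a SQLite source, and the `customers` table, its columns, and the `expected` schema are hypothetical stand-ins for whatever your requirements document specifies.

```python
import sqlite3

def table_columns(conn, table):
    """Return {column_name: declared_type} for a table, or {} if it does not exist."""
    # PRAGMA table_info yields rows of (cid, name, type, notnull, dflt_value, pk).
    # The table name is interpolated directly, so it must come from trusted code.
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return {row[1]: row[2].upper() for row in rows}

def schema_problems(conn, table, expected):
    """List the differences between a table's actual columns and the expected ones."""
    actual = table_columns(conn, table)
    if not actual:
        return [f"table {table!r} is missing"]
    problems = []
    for name, col_type in expected.items():
        if name not in actual:
            problems.append(f"column {name!r} is missing")
        elif actual[name] != col_type:
            problems.append(f"column {name!r} is {actual[name]}, expected {col_type}")
    return problems

# Hypothetical source table and expected schema, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT, signup_date TEXT)")
expected = {"id": "INTEGER", "email": "TEXT", "country": "TEXT"}

problems = schema_problems(conn, "customers", expected)
print(problems)
```

Other databases expose the same information differently (for example through an information schema), so the lookup function is the part you would swap out.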

3. Creating and Executing Test Cases

Source-to-target mapping and test case design are the next steps, and often include the following:

- Transformations based on business test cases

- SQL scripts for conducting source-to-target comparisons

- Execution flows

Following the preparation and validation of these elements, test cases are executed in a staging environment. Typically, testers can’t use sensitive production data, which necessitates the availability of real-time synthetic data. You can create this data manually or through test data generation tools.
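As a rough illustration of a source-to-target comparison script, the sketch below uses SQL's `EXCEPT` operator through Python's built-in `sqlite3` module. The `src_orders` and `tgt_orders` tables and their synthetic rows are assumptions made up for this example, not part of any particular tool.

```python
import sqlite3

# Synthetic data in an in-memory staging database (hypothetical tables).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (order_id INTEGER, amount REAL);
    CREATE TABLE tgt_orders (order_id INTEGER, amount REAL);
    INSERT INTO src_orders VALUES (1, 10.0), (2, 25.5), (3, 7.25);
    INSERT INTO tgt_orders VALUES (1, 10.0), (2, 20.0);
""")

# Rows that exist in the source but are absent or different in the target.
MISSING_IN_TARGET = """
    SELECT order_id, amount FROM src_orders
    EXCEPT
    SELECT order_id, amount FROM tgt_orders
    ORDER BY order_id
"""

# The reverse comparison catches rows the target should not contain.
UNEXPECTED_IN_TARGET = """
    SELECT order_id, amount FROM tgt_orders
    EXCEPT
    SELECT order_id, amount FROM src_orders
    ORDER BY order_id
"""

missing = conn.execute(MISSING_IN_TARGET).fetchall()
unexpected = conn.execute(UNEXPECTED_IN_TARGET).fetchall()
print("missing or changed:", missing)
print("unexpected:", unexpected)
```

Running the comparison in both directions matters: a one-way `EXCEPT` would not notice extra rows that appear only in the target.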

4. Data Extraction and Reporting

In the next step, perform the ETL tests according to business requirements and use cases. During test case execution, identify the different kinds of errors or defects, try to reproduce them, and log them with adequate details and screenshots.

In the reporting stage, you can record defects onto specialized Defect Management Systems and assign them to designated personnel for fixing.

For successful resolution of errors, analysts need to provide the following:

- Sufficient documentation for reproducing the test case

- Relevant screenshots

- A comparison between the actual and expected results for each test case
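The comparison between actual and expected results can be generated rather than written by hand. The following is a minimal sketch; the `DefectReport` structure, the test case name, and the sample values are all hypothetical and would normally map onto the fields of your defect management system.

```python
from dataclasses import dataclass, field

@dataclass
class DefectReport:
    """Hypothetical defect record holding what an analyst needs to reproduce a failure."""
    test_case: str
    steps_to_reproduce: list
    mismatches: dict = field(default_factory=dict)

def compare_results(expected, actual):
    """Return {field: (expected_value, actual_value)} for every field that differs."""
    keys = sorted(set(expected) | set(actual))
    return {
        key: (expected.get(key), actual.get(key))
        for key in keys
        if expected.get(key) != actual.get(key)
    }

# Illustrative values only.
expected = {"order_id": 42, "status": "SHIPPED", "total": 99.5}
actual = {"order_id": 42, "status": "shipped", "total": 99.5}

report = DefectReport(
    test_case="TC-017 order status mapping",
    steps_to_reproduce=["Load order 42 into staging", "Run the ETL job"],
    mismatches=compare_results(expected, actual),
)
print(report.mismatches)
```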

5. Applying Transformations

Next, you must ensure that data is adequately transformed to match the destination data warehouse’s schema. Besides validating the data flow, you’ll also check the data threshold and alignment. The goal here is to verify that the data type for each table and column matches the mapping document.
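One way to check data types against a mapping document is to express the mapping as a dictionary and test every transformed row against it. This is a sketch under assumptions: the `MAPPING` below and the column names are invented for illustration, and nulls are deliberately skipped because they are usually covered by a separate check.

```python
from datetime import date

# Hypothetical mapping document: target column -> expected Python type.
MAPPING = {"customer_id": int, "email": str, "signup_date": date}

def type_violations(rows, mapping):
    """Return (row_index, column, actual_type_name) for each value of the wrong type."""
    violations = []
    for index, row in enumerate(rows):
        for column, expected_type in mapping.items():
            value = row.get(column)
            # Nulls are ignored here; null handling belongs to a separate check.
            if value is not None and not isinstance(value, expected_type):
                violations.append((index, column, type(value).__name__))
    return violations

# Illustrative transformed rows; the second one was left as raw strings.
rows = [
    {"customer_id": 1, "email": "a@example.com", "signup_date": date(2024, 1, 5)},
    {"customer_id": "2", "email": "b@example.com", "signup_date": "2024-01-06"},
]

violations = type_violations(rows, MAPPING)
print(violations)
```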

6. Loading Data into The Data Warehouse

You’ll do a record count check both before and after moving the data from the staging environment to the data warehouse. Verify rejection of the invalid data and acceptance of the default values.
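The load checks described here can be sketched as a small reconciliation: every staged row should end up either loaded or rejected, and loaded rows should carry defaults where values were missing. The validity rule, the `DEFAULT_COUNTRY` value, and the sample rows are assumptions chosen for the example.

```python
DEFAULT_COUNTRY = "UNKNOWN"  # hypothetical default value for a missing country

def is_valid(row):
    """Hypothetical rule: a row needs an id and a non-negative numeric amount."""
    amount = row.get("amount")
    return (
        row.get("id") is not None
        and isinstance(amount, (int, float))
        and amount >= 0
    )

def load(staged_rows):
    """Split staged rows into loaded rows (with defaults applied) and rejected rows."""
    loaded, rejected = [], []
    for row in staged_rows:
        if not is_valid(row):
            rejected.append(row)
        else:
            loaded.append({**row, "country": row.get("country") or DEFAULT_COUNTRY})
    return loaded, rejected

# Illustrative staging data.
staged = [
    {"id": 1, "amount": 10.0, "country": "DE"},
    {"id": 2, "amount": 5.0, "country": None},
    {"id": None, "amount": 3.0, "country": "FR"},
    {"id": 4, "amount": -1.0, "country": "US"},
]

loaded, rejected = load(staged)

# Record count check: nothing may disappear between staging and the warehouse.
counts_reconcile = len(staged) == len(loaded) + len(rejected)
print(len(staged), len(loaded), len(rejected), counts_reconcile)
```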

7. Re-Testing the Bug (Regression Testing)

After a bug is fixed, retest it in the same staging environment to verify that the defect no longer occurs. Regression testing also helps ensure that the fix hasn’t introduced any new defects.

8. Summary Report and Test Closure

In the final step, close reports detailing the defects and test cases with comments and all related documentation. Before closing the summary report, test its options, filters, layout, and export functionality.

The summary report details the testing process and its results, and lets stakeholders know if and why a step wasn’t completed.

Types of ETL Testing

Each step of the ETL testing process involves different types of tests, some of which are:

1. Production Validation and Reconciliation

This test validates the order and logic of the data while it’s loaded into the production systems. It compares the production system data with the source data to prevent non-compliance, data errors, or faults in the schema.

2. Source-to-Target Validation

This test checks that the source system data count matches the data loaded in the destination system/warehouse.

3. Metadata Testing

This test matches the data types, indexes, lengths, constraints, schemas, and values between the source and target systems.

4. Completeness Testing

This verifies that all source data is loaded into the destination system without duplication, repetition, or loss.
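A completeness check often comes down to comparing business keys between source and target. The sketch below assumes each record has a unique key in the source; the key values are made up.

```python
from collections import Counter

def completeness_report(source_keys, target_keys):
    """Report keys lost during the load, keys that appeared from nowhere, and duplicates."""
    target_counts = Counter(target_keys)
    return {
        "missing": sorted(set(source_keys) - set(target_keys)),
        "unexpected": sorted(set(target_keys) - set(source_keys)),
        "duplicated": sorted(key for key, n in target_counts.items() if n > 1),
    }

# Illustrative keys: 103 was lost, 102 was loaded twice, 999 has no source record.
report = completeness_report(
    source_keys=[101, 102, 103, 104],
    target_keys=[101, 102, 102, 104, 999],
)
print(report)
```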

5. Transformation Testing

When multiple transformations are applied to one type of data, this test helps confirm that all data has transformed consistently based on the applicable rules.
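A common way to test transformations is to re-implement the business rules independently and compare the result with what the pipeline actually loaded. The rules in this sketch (trimmed names joined into `full_name`, upper-cased `country`) and all sample rows are hypothetical.

```python
def transform(source_row):
    """Independent re-implementation of the (hypothetical) business rules."""
    first = source_row["first_name"].strip()
    last = source_row["last_name"].strip()
    return {
        "customer_id": source_row["id"],
        "full_name": f"{first} {last}",
        "country": source_row["country"].upper(),
    }

def transformation_mismatches(source_rows, target_rows):
    """Return (customer_id, expected_row, actual_row) wherever the target deviates."""
    target_by_id = {row["customer_id"]: row for row in target_rows}
    mismatches = []
    for source_row in source_rows:
        expected = transform(source_row)
        actual = target_by_id.get(expected["customer_id"])
        if actual != expected:
            mismatches.append((expected["customer_id"], expected, actual))
    return mismatches

# Illustrative data: the pipeline forgot to trim the second customer's last name.
source = [
    {"id": 1, "first_name": " Ada", "last_name": "Lovelace", "country": "gb"},
    {"id": 2, "first_name": "Alan", "last_name": "Turing ", "country": "gb"},
]
target = [
    {"customer_id": 1, "full_name": "Ada Lovelace", "country": "GB"},
    {"customer_id": 2, "full_name": "Alan Turing ", "country": "GB"},
]

mismatches = transformation_mismatches(source, target)
print([customer_id for customer_id, _, _ in mismatches])
```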

6. Accuracy Testing

Following the completion of all transformations, the data’s accuracy is verified. There can be changes in the data’s formats and schema, but the information and its quality should not change during the transformations.
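To illustrate the idea that the format may change while the information must not, the sketch below assumes the source stores amounts as formatted strings and the target stores them as integer cents. Both formats and all values are invented for the example; `Decimal` is used to avoid floating-point rounding in the comparison.

```python
from decimal import Decimal

def source_amount(text):
    """Parse a source amount such as '1,250.50' (assumed source format)."""
    return Decimal(text.replace(",", ""))

def target_amount(cents):
    """Convert integer cents (assumed target format) back to a decimal amount."""
    return Decimal(cents) / 100

# Illustrative records keyed by account id; A3 gained one cent during the load.
source = {"A1": "1,250.50", "A2": "99.99", "A3": "10.00"}
target = {"A1": 125050, "A2": 9999, "A3": 1001}

# Record-level check: which accounts carry a different value after transformation?
inaccurate = sorted(
    key for key, text in source.items()
    if key not in target or source_amount(text) != target_amount(target[key])
)

# Aggregate check: totals should agree even though the formats differ.
source_total = sum(source_amount(text) for text in source.values())
target_total = sum(target_amount(cents) for cents in target.values())
print(inaccurate, source_total, target_total)
```

The aggregate comparison is cheap to run on large tables, while the record-level comparison tells you where to look once the totals disagree.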

7. Data Quality Testing

This testing type focuses on data quality to identify invalid characters, precisions, nulls, and patterns. It reports any invalid data.
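A few of these quality checks (nulls, invalid characters, and patterns) are sketched below. The email pattern is deliberately loose and only an assumption for the example, as are the column names and sample rows; precision checks are not covered here.

```python
import re

# Deliberately loose pattern for illustration; real rules come from your requirements.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def quality_issues(rows):
    """Return (row_index, column, issue) for nulls, unprintable characters, and bad emails."""
    issues = []
    for index, row in enumerate(rows):
        for column, value in row.items():
            if value is None:
                issues.append((index, column, "null"))
            elif isinstance(value, str) and not value.isprintable():
                issues.append((index, column, "invalid character"))
        email = row.get("email")
        if isinstance(email, str) and email.isprintable() and not EMAIL_PATTERN.match(email):
            issues.append((index, "email", "pattern"))
    return issues

# Illustrative rows: a control character, a malformed email, and a null name.
rows = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Al\x00an", "email": "alan-at-example.com"},
    {"name": None, "email": "grace@example.com"},
]

issues = quality_issues(rows)
for issue in issues:
    print(issue)
```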

8. Report Testing

This type of testing checks the data in the summary report, determines if the layout and functionality are appropriate, and performs calculations for additional analytical requirements.

9. Application Migration Testing

Application migration testing verifies whether the ETL application is functioning properly following migration to a new platform or box.

10. Data and Constraint Checks

This testing technique checks the datatype, length, index, and constraints.
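Constraint checks can be exercised by deliberately inserting rows that should be refused and confirming that the database rejects them. The sketch below uses SQLite through Python's `sqlite3` module; the `dim_customer` table and its constraints are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE dim_customer (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL UNIQUE,
        age         INTEGER CHECK (age >= 0)
    )
""")
conn.execute("INSERT INTO dim_customer VALUES (1, 'ada@example.com', 36)")

def is_rejected(conn, row):
    """Return True if inserting the row violates a constraint on dim_customer."""
    try:
        conn.execute("INSERT INTO dim_customer VALUES (?, ?, ?)", row)
    except sqlite3.IntegrityError:
        return True
    return False

# Each row is built to violate exactly one constraint.
bad_rows = {
    "null email": (2, None, 40),
    "duplicate email": (3, "ada@example.com", 28),
    "negative age": (4, "alan@example.com", -1),
}
results = {name: is_rejected(conn, row) for name, row in bad_rows.items()}

# A valid row should still be accepted.
valid_row_rejected = is_rejected(conn, (5, "grace@example.com", 45))
print(results, valid_row_rejected)
```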

Common Challenges in ETL Testing

There are several factors that can disrupt or adversely affect the ETL testing process. Below are the challenges that ETL testers encounter the most:

- Complex Data Transformations and Processes: Applying multiple transformations to expansive datasets can be a complicated and time-intensive procedure. The same happens when too many complex data integrations and business processes exist.

- Poor Data Quality: ETL testing requires accurate, clean, and high-quality data for the best results. Poor input data quality can affect the reliability of the process.

- Resource-Intensive: ETL testing can become resource-intensive when large, complicated source systems are involved.

- Decreased Performance: Big data volumes can hinder processing or end-to-end performance, ultimately affecting data accuracy and completeness.

- Changes in Data Sources: Any changes in the data sources can potentially impact data accuracy, completeness, and quality.

- Personnel Requirements: Enterprises need people with experience in ETL and data expertise to ensure the design and implementation of robust ETL testing processes.

ETL Testing Best Practices

The following best practices can help you optimize your ETL testing processes:

1. Working with Business Test Cases

It’s not enough to develop a functional ETL testing process. It also needs to cater to varying business requirements that are different for every organization. An ETL testing process should complement your existing workflow, not disrupt it.

2. Using Clean Source Data

Clean source data is a fundamental requirement for an effective ETL testing process. Rather than leaving it for the end, you should start ETL testing with clean source data to save time and obtain better results.

3. Testing for Efficiency

Working with BI tools necessitates consistent access to updated data, so you should ensure both data completeness and quick data delivery. Optimize the ETL testing process for accelerated testing and speedy results.

4. Automation

While full automation is ideal, even partial automation is better than zero automation. Automated tools like Astera allow you to streamline the ETL testing process while resolving the common challenges associated with manual work.

Experience Faster and More Reliable ETL Automation

Astera's all-in-one ETL solution is what your enterprise needs for streamlined ETL testing. Ensure top-notch data quality at all times while enjoying no-code convenience. Get started today!

Choosing The Right ETL Testing Tool

Here are a few factors you should keep in mind when choosing an ETL testing tool for your business:

- Intuitive Interface

An intuitive interface makes it easy for you to design and implement ETL processes. Look for a graphical interface with drag-and-drop functionality for enhanced ease of use and accessibility.

- Automated Code Generation

Automated code generation can eliminate the time and effort spent on manually coding and developing processes. It can also significantly reduce errors.

- Built-in Data Connectors

Your ETL testing tool of choice should be equipped with built-in data connectors. These connectors can improve data access and make it easier to work with your go-to file formats, databases, legacy systems, or packaged applications.

- Content Management Features

Content management capabilities enable rapid context switching between ETL development, testing, and production environments.

- Debugging Features

An ETL testing tool with powerful debugging features can help you keep up with data flows in real time and quickly create reports on row-by-row behavior.

Conclusion

Accurate data analysis is crucial for any organization wanting to leverage its data to get ahead in its industry. When done right, ETL testing can boost an organization’s confidence in its data by improving its integrity and reliability. The resulting business intelligence is sound and helps mitigate business risk.

Additionally, automated ETL testing tools boost a data warehouse’s stability and performance faster than manual coding, make the ETL process more organized and manageable, and enable the rapid identification and correction of anomalies in the ETL process. Businesses today are moving away from manual ETL testing and integrating automated ETL testing solutions into their workflow to reap these benefits.

Yours can too.

Astera is an ETL automation solution with built-in features for ETL testing, integration, validation, transformation, and more. Its ETL automation and advanced profiling capabilities allow users to reconcile data at every step of the ETL process easily. Verifying data quality and identifying errors is easier with Astera’s simple, no-code, drag-and-drop interface.

Find out how Astera can transform your ETL procedures and help you maintain data quality for accurate analyses. Reach out to our team or start your FREE trial today.

